
Bottom line: Nvidia took the wraps off its Hopper architecture at GTC 2022, announcing the H100 server accelerator but only showing off renders of it. Now we finally have some in-hand photos of the SXM variant of the card, which features a mind-boggling 700W TDP.

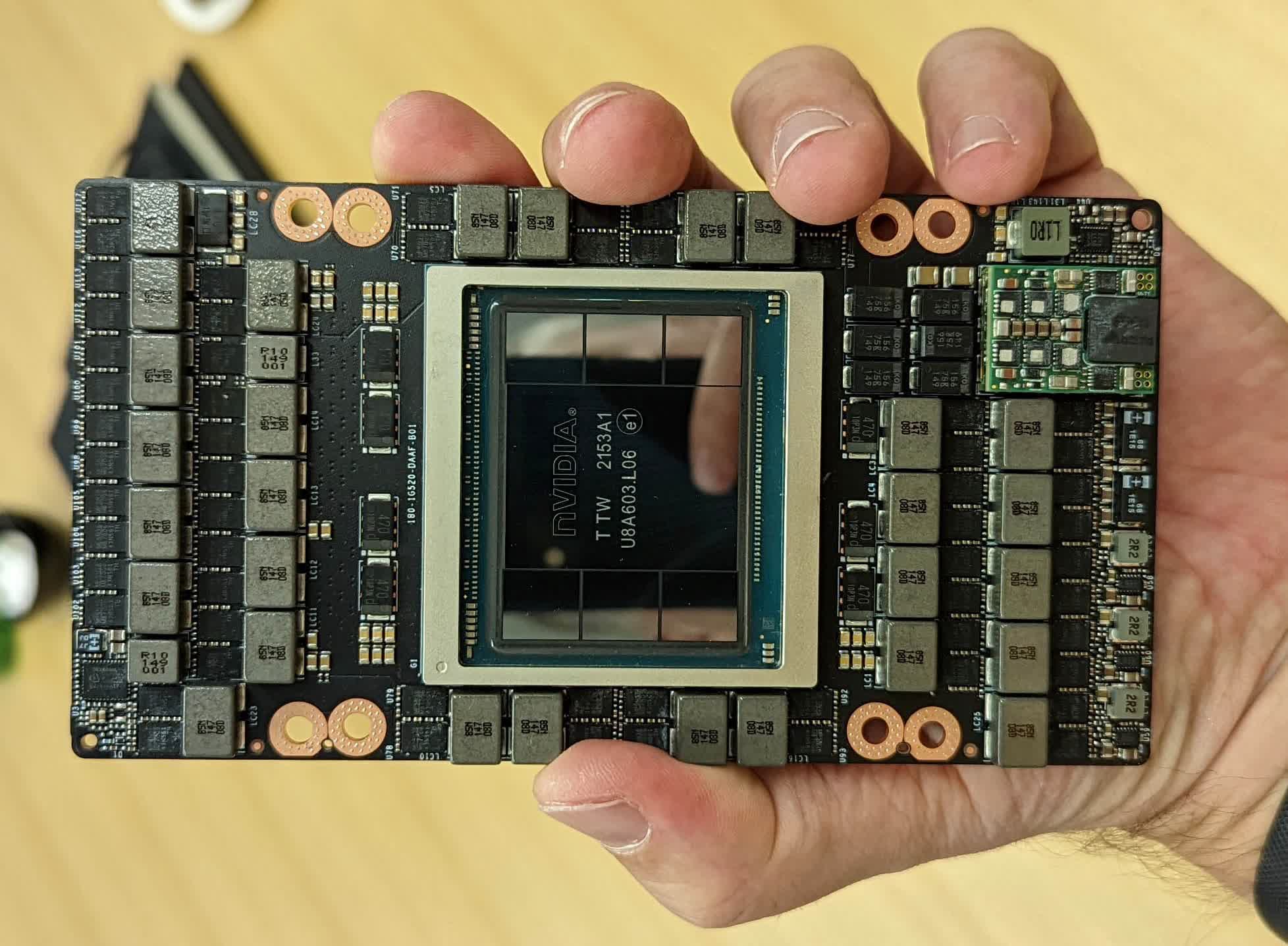

It has been a bit over a month since Nvidia unveiled its H100 server accelerator based on the Hopper architecture, and so far, we have only seen renders of it. That changes today, as ServeTheHome has just shared pictures of the card in its SXM5 form factor.

The GH100 compute GPU is fabricated on TSMC's N4 process node and has an 814 mm² die. The SXM variant features 16,896 FP32 CUDA cores, 528 Tensor cores, and 80GB of HBM3 memory connected over a 5120-bit bus. As can be seen in the images, there are six 16GB memory stacks around the GPU, but one of them is disabled.
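
The memory figures above fit together with simple arithmetic: only the enabled stacks count toward capacity and bus width. The short Python sketch below works it through. It assumes each HBM3 stack exposes the standard 1024-bit interface, which the article does not state, and the constant and function names are purely illustrative.

```python
# Sketch: how six 16GB HBM3 stacks with one disabled yield 80GB on a 5120-bit bus.
# Assumption (not from the article): each HBM stack has a 1024-bit interface.

STACKS_ON_PACKAGE = 6
STACKS_DISABLED = 1
GB_PER_STACK = 16
BITS_PER_STACK = 1024  # assumed per-stack interface width


def active_memory_config(stacks: int, disabled: int,
                         gb_per_stack: int, bits_per_stack: int) -> tuple[int, int]:
    """Return (capacity in GB, bus width in bits) counting only enabled stacks."""
    active = stacks - disabled
    return active * gb_per_stack, active * bits_per_stack


capacity_gb, bus_bits = active_memory_config(
    STACKS_ON_PACKAGE, STACKS_DISABLED, GB_PER_STACK, BITS_PER_STACK
)
print(f"{capacity_gb} GB over a {bus_bits}-bit bus")  # 80 GB over a 5120-bit bus
```

Five active stacks account for both the 80GB capacity and the 5120-bit bus, which is consistent with one of the six packaged stacks being disabled.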

Nvidia also quoted a staggering 700W TDP, 75% higher than its predecessor, so it's no surprise that the card comes with an extremely impressive VRM solution. It features 29 inductors, each equipped with two power stages, plus an additional three inductors with one power stage each. Cooling all of these tightly packed components will probably be a challenge.
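
The VRM and TDP numbers can also be checked by hand. This minimal Python sketch totals the power stages from the inductor counts given above and inverts the "75% higher" claim to recover the predecessor's TDP. The grouping into (inductors, stages per inductor) pairs and the helper names are illustrative choices, not anything from Nvidia or ServeTheHome.

```python
# Sketch: total power stages on the SXM5 board and the TDP implied for its predecessor.
# Counts and the 700W / 75% figures come from the article; the helpers are hypothetical.

H100_SXM_TDP_W = 700
INCREASE_OVER_PREDECESSOR = 0.75

# (number of inductors, power stages per inductor)
VRM_GROUPS = [(29, 2), (3, 1)]


def total_power_stages(groups: list[tuple[int, int]]) -> int:
    """Sum power stages across all inductor groups."""
    return sum(inductors * stages for inductors, stages in groups)


def implied_predecessor_tdp(new_tdp_w: float, increase: float) -> float:
    """Invert 'X% higher than before': old = new / (1 + X)."""
    return new_tdp_w / (1 + increase)


stages = total_power_stages(VRM_GROUPS)
old_tdp = implied_predecessor_tdp(H100_SXM_TDP_W, INCREASE_OVER_PREDECESSOR)
print(f"{stages} power stages; predecessor TDP ~{old_tdp:.0f} W")
```

The board carries 61 power stages in total (29 × 2 + 3 × 1), and 700W being 75% higher works out to a 400W predecessor, which matches the rated TDP of the A100 in its SXM4 form factor.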

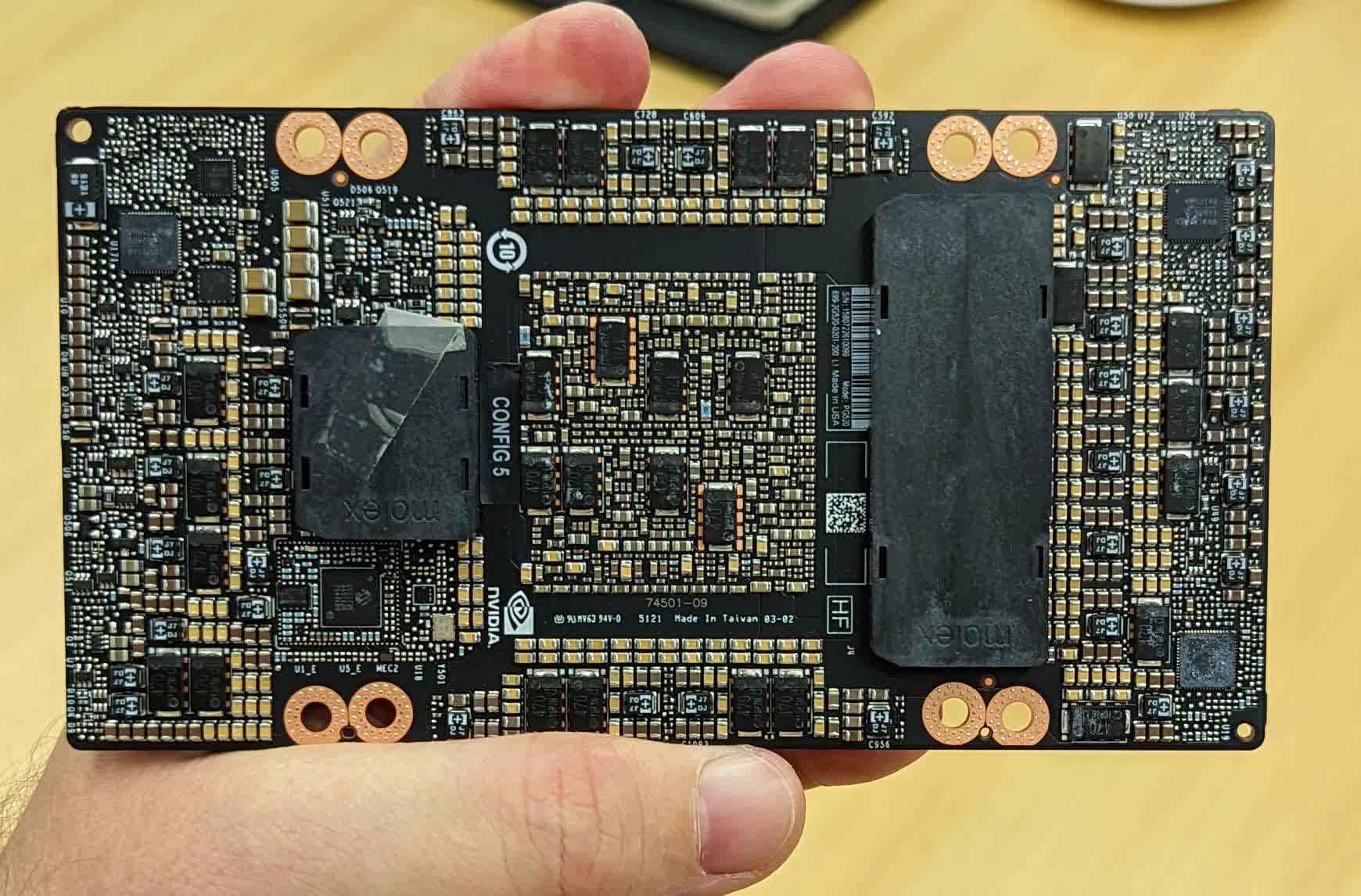

Another noticeable change is the connector layout for SXM5. There's now one short and one long mezzanine connector, whereas previous generations featured two identically sized longer ones.

Nvidia will start shipping H100-equipped systems in Q3 of this year. It's worth mentioning that the PCIe version of the H100 is currently listed in Japan for 4,745,950 yen ($36,300) after taxes and shipping, even though it has fewer CUDA cores, downgraded HBM2e memory, and half the TDP of the SXM variant.
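
For a rough sense of the listing's figures, the sketch below derives the exchange rate implied by the article's own yen and dollar amounts, along with the PCIe card's TDP from the "half the TDP" remark. The rate is computed from those two prices, not taken from any market source, and the variable names are illustrative.

```python
# Sketch: figures implied by the Japanese PCIe H100 listing.
# Prices and the 700W SXM TDP come from the article; the exchange rate is derived
# from them rather than quoted from a market source.

PRICE_JPY = 4_745_950
PRICE_USD = 36_300
SXM_TDP_W = 700

implied_jpy_per_usd = PRICE_JPY / PRICE_USD
pcie_tdp_w = SXM_TDP_W / 2  # "half the TDP of the SXM variant"

print(f"~{implied_jpy_per_usd:.1f} JPY per USD; PCIe TDP ~{pcie_tdp_w:.0f} W")
```

This works out to roughly 130.7 yen per dollar and a 350W PCIe card.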
