
Ten people were murdered this weekend in a racist attack on a Buffalo, New York grocery store. The eighteen-year-old white supremacist shooter livestreamed his attack on Twitch, the Amazon-owned video game streaming platform. Even though Twitch removed the video two minutes after the violence began, it was still too late. Now, graphic footage of the terrorist attack is openly circulating on platforms like Facebook and Twitter, even after the companies vowed to take down the video.

On Facebook, some users who flagged the video were notified that the content did not violate its rules. The company told TechCrunch that this was a mistake, adding that it has teams working around the clock to take down videos of the shooting, as well as links to the video hosted on other sites. Facebook said that it is also removing copies of the shooter’s racist screed, and content that praises him.

But when we searched a term as simple as “footage of buffalo shooting” on Facebook, one of the first results featured a 54-second screen recording of the terrorist’s footage. TechCrunch encountered the video an hour after it had been uploaded and reported it immediately. The video wasn’t taken down until three hours after posting, when it had already been viewed over a thousand times.

In theory, this shouldn’t happen. A representative for Facebook told TechCrunch that it added multiple versions of the video, as well as the shooter’s racist writings, to a database of violating content, which helps the platform identify, remove and block such content. We asked Facebook about this particular incident, but the company didn’t provide additional details.

“We’re going to continue to learn, to refine our processes, to ensure that we can detect and take down violating content more quickly in the future,” Facebook integrity VP Guy Rosen said on an unrelated call on Tuesday, in response to a question about why the company struggled to remove copies of the video.

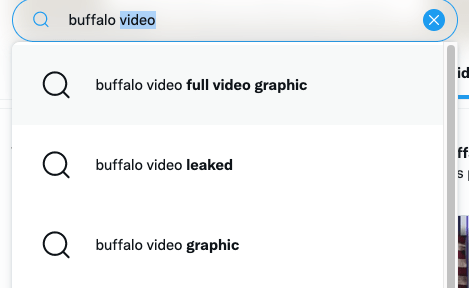

Reposts of the shooter’s stream were also easy to find on Twitter. In fact, when we typed “buffalo video” into the search bar, Twitter suggested searches like “buffalo video full video graphic,” “buffalo video leaked” and “buffalo video graphic.”

Image Credits: Twitter, screenshot by TechCrunch

We encountered multiple videos of the attack that had been circulating on Twitter for over two days. One such video had over 261,000 views when we reviewed it on Tuesday afternoon.

In April, Twitter enacted a policy that bans individual perpetrators of violent attacks from Twitter. Under this policy, the platform also reserves the right to take down multimedia related to attacks, as well as language from terrorist “manifestos.”

“We are removing videos and media related to the incident. In addition, we may remove Tweets disseminating the manifesto or other content produced by perpetrators,” a spokesperson from Twitter told TechCrunch. The company called this “hateful and discriminatory” content “dangerous for society.”

Twitter also claims that some users are attempting to circumvent takedowns by uploading altered or manipulated content related to the attack.

In contrast, video footage of the weekend’s tragedy was relatively difficult to find on YouTube. Basic search terms for the Buffalo shooting video mostly brought up coverage from mainstream news outlets. With the same search terms we used on Twitter and Facebook, we were able to identify a handful of YouTube videos with thumbnails of the shooting that turned out to be unrelated content once clicked through. On TikTok, TechCrunch identified some posts that directed users to websites where they could watch the video, but didn’t find the actual footage on the app in our searches.

Twitch, Twitter and Facebook have stated that they are working with the Global Internet Forum to Counter Terrorism to limit the spread of the video. Twitch and Discord have also confirmed that they are working with government authorities that are investigating the situation. The shooter described his plans for the shooting in detail in a private Discord server prior to the attack.

According to documents reviewed by TechCrunch, the Buffalo shooter decided to broadcast his attack on Twitch because a 2019 anti-semitic shooting at Halle Synagogue remained live on Twitch for over thirty minutes before it was taken down. The shooter considered streaming to Facebook, but opted not to use the platform because he thought users needed to be logged in to watch livestreams.

Facebook has also inadvertently hosted mass shootings that evaded algorithmic detection. The same year as the Halle Synagogue shooting, 50 people were killed in an Islamophobic attack on two mosques in Christchurch, New Zealand, which streamed for 17 minutes. At least three perpetrators of mass shootings, including the suspect in Buffalo, have cited the livestreamed Christchurch massacre as a source of inspiration for their racist attacks.

Facebook noted the day after the Christchurch shootings that it had removed 1.5 million videos of the attack, 1.2 million of which were blocked upon upload. Of course, this raised the question of why Facebook was unable to immediately detect the other 300,000 videos, a 20% failure rate.

Judging by how easy it was to find videos of the Buffalo shooting on Facebook, it seems the platform still has a long way to go.
