As more generative AI innovations become available, the question of what’s trustworthy becomes more acute. Salesforce Research & Insights recently conducted research that shows AI is more trusted and desirable when humans work in tandem with AI. The team collected more than 1,000 generative AI use cases at Dreamforce and hosted deep-dive conversations with 30 Salesforce buyers, admins, and end users. Results show customers and users do believe in the potential of AI to increase efficiency and consistency, bring inspiration and fulfillment, and improve how they organize their thoughts. However, all customers and users in the study reported that they aren’t ready to fully trust generative AI without a human at the helm.

So, what does that mean for admins who are responsible for influencing how generative AI shows up for humans? Let us share some context and guidance on ways to support human-generative AI interaction.

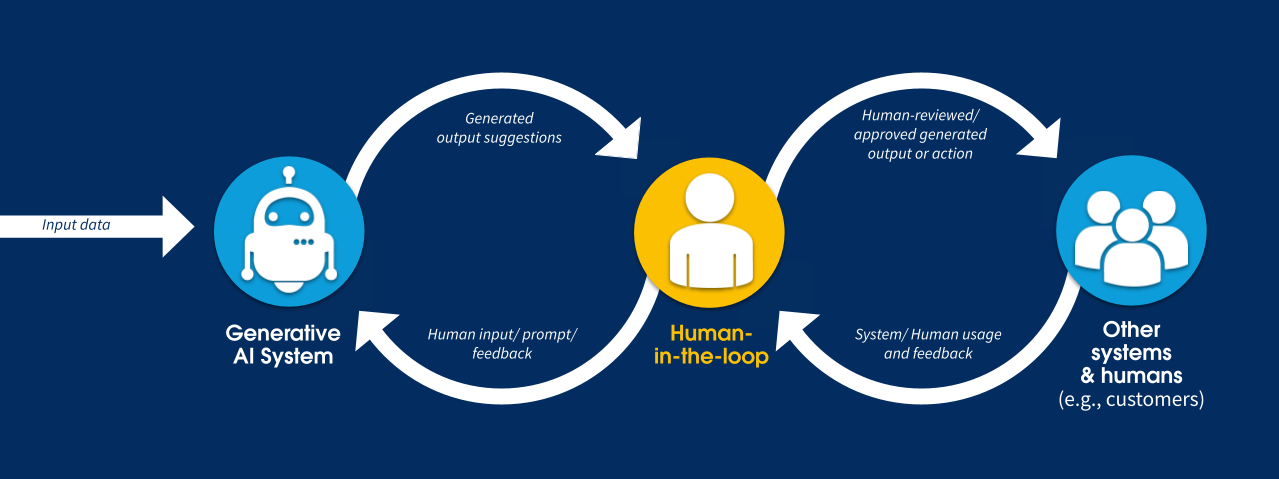

What’s a ‘human in the loop’?

Originally used in military, nuclear energy, and aviation contexts, “human in the loop” (HITL) referred to the ability for humans to intervene in automated systems to prevent disasters. In the context of generative AI, HITL is about giving humans an opportunity to review and act upon AI-generated content. Our recent studies reveal, however, that in many cases, humans (employees at your organization or business) should be even more thoughtfully and meaningfully embedded into the process as the “human-at-the-helm.”

Human involvement increases quality and accuracy and is the primary driver for trust

An important learning from this research is that customers and users understand generative AI isn’t perfect. It can be unpredictable, inaccurate, and at times can make things up. Not knowing the data source or the completeness and quality of that data also degrades customers’ and users’ trust. Not to mention, the learning curve for how to most effectively prompt generative AI is steep. Without effective prompts, users recognize that their chances of achieving quality outputs are limited. When it comes to finessing outputs, customers and users believe that people bring skills, expertise, and “humanness” that generative AI can’t.

For almost all use cases, customers and users believe there needs to be at least an initial trust-building period that involves high human touch. Of the 90 use cases collected in one research session, only two were appropriate for full autonomy. HITL is especially important for compound (i.e., “Do X, based on W” or “Do X, then do Y”), macro-level (e.g., “define a sales forecasting strategy”), or high-risk tasks (e.g., “email a high-value customer” or “diagnose an illness”).

Situations calling for increased human touch can include:

- When the data source is unknown or untrusted.

- When the output is for external use.

- When using the output as a final deliverable.

- When using the output to impersonate (e.g., a script for a sales call) rather than inform (e.g., information about a prospect to consume ahead of a cold call).

- When the user is still learning prompt writing, since poor prompts lead to more effort to finesse outputs.

- For personal, bespoke, persuasive outputs (e.g., a marketing campaign).

- When inputs needed to achieve the desired output are qualitative, nuanced, or implicit (not codified).

- When inputs needed are recent (not yet in the dataset).

- When users have experienced generative AI errors before (low-trust environments).

- When stakes are high (e.g., high financial impact, broad reach, risk to end consumer, legal risk, when it’s not socially acceptable to use AI).

- When humans are doing the job well today (and the tool is meant to supercharge those humans, not fill a gap in human resources).

In these situations, more deeply involving a human in the decision-making can help us achieve responsible AI by improving accuracy and quality (humans can catch errors, add context or expertise, and infuse human tone), ensuring safety (humans can mitigate ethical and legal risks), and promoting user empowerment. All the while, human input spurs continuous improvement of the generative AI system and helps customers and users build trust with the technology.
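For admins who want to turn a few of these situations into a routing rule, here is a minimal sketch in Python. It is an illustration only, not a Salesforce feature or API: the `TaskContext` fields, the `review_reasons` function, and the choice of which situations to encode are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TaskContext:
    """Hypothetical description of one generative AI task (names are assumptions)."""
    data_source_trusted: bool = True
    external_use: bool = False
    final_deliverable: bool = False
    user_new_to_prompting: bool = False
    prior_ai_errors: bool = False
    high_stakes: bool = False


def review_reasons(task: TaskContext) -> List[str]:
    """Return the reasons, if any, that this task calls for increased human touch."""
    reasons = []
    if not task.data_source_trusted:
        reasons.append("data source is unknown or untrusted")
    if task.external_use:
        reasons.append("output is for external use")
    if task.final_deliverable:
        reasons.append("output is a final deliverable")
    if task.user_new_to_prompting:
        reasons.append("user is still learning prompt writing")
    if task.prior_ai_errors:
        reasons.append("users have experienced AI errors before")
    if task.high_stakes:
        reasons.append("stakes are high")
    return reasons


def requires_human_review(task: TaskContext) -> bool:
    """A task goes to a human whenever at least one reason applies."""
    return bool(review_reasons(task))
```

Returning the reasons, rather than a bare yes or no, lets the system explain to the user why it is asking for a review, which fits the research finding that unexplained friction causes frustration.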

Building Trust Takes Time

Trust is the most important aspect of generative AI, but trust isn’t a one-time conversation. It’s a journey that takes time to cultivate but can be quick to break. Nearly all research participants shared experiences of trusting generative AI that ultimately failed them. Customers hope for a reliable pattern of accuracy and quality over time.

To cultivate trust over time and help users build confidence in AI technology, our research has shown generative AI systems should be designed to keep humans at the helm, especially during an initial trust-building or onboarding period, and enable continuous learning for both the user and the machine. Through these experiences: thoughtfully involve human judgment and expertise; help the human understand the system by being transparent and honest about how the system works and what its limitations are; afford users the opportunity to give feedback to the system; and design the system to take in signals, make sense of users’ needs, and guide users in how to effectively collaborate with the technology.

Uphold the value proposition of efficiency

Most compelling to research participants is generative AI’s promise to reduce time on task. Customers warned that they will abandon or avoid systems that undermine this value proposition. The research revealed several ways to ensure efficiency:

- To minimize effort editing outputs: guide users in writing effective prompts. Help them identify where and how to add their expertise.

- To limit “swivel-chairing” between tools: support users in micro-editing outputs from within the generative AI system. Example: give users a way to choose from output options, re-prompt, or tweak specific words or phrases in-app.

Inspire over mandate

Unavoidable and seemingly arbitrary friction, without an explanation of the benefits or reasons for the friction, can cause frustration. When possible, encourage users to participate, rather than requiring them to do so. To drive willing human participation, generative AI systems should be designed to:

- Provide inspiration. Example: offer output options to choose from.

- Foster exploration and experimentation. Example: provide the opportunity to easily iterate and micro-edit outputs.

- Prioritize informative guardrails over restrictive guardrails. Example: flag potential risk. Empower users to determine the appropriate course of action, rather than completely blocking them from taking a certain action.
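The difference between an informative and a restrictive guardrail can be sketched in a few lines of Python. This is a hypothetical illustration, not a description of any Salesforce product: the `RISK_TERMS` table, the `GuardrailResult` type, and the simple substring check are assumptions chosen to keep the example short.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical risk phrases and the note shown to the user for each one.
RISK_TERMS = {
    "guarantee": "Contains a guarantee; check for legal risk before sending.",
    "diagnos": "Mentions a diagnosis; have a qualified person review it.",
}


@dataclass
class GuardrailResult:
    text: str                                   # the draft, returned unchanged
    warnings: List[str] = field(default_factory=list)
    blocked: bool = False                       # never set: the user decides


def informative_guardrail(draft: str) -> GuardrailResult:
    """Flag potential risk in a draft without blocking the user's action."""
    lowered = draft.lower()
    warnings = [note for term, note in RISK_TERMS.items() if term in lowered]
    return GuardrailResult(text=draft, warnings=warnings)
```

A restrictive guardrail would raise an error or discard the draft at the same point. Here the draft always comes back intact, and the warnings give the user the context to choose the next step.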

Embrace different strokes for different folks (and scenarios)

In our research, we prioritized inclusivity by actively involving neurodivergent users and those with English as a second language as experts in digital exclusion. We collaborated with them to test solutions that ensure the technology benefits everyone from the outset.

This highlighted that different skills, abilities, situations, and use cases call for different modes of interaction. Design for these differences, and/or design for optionality. Among the ways to design generative AI systems with inclusivity in mind are:

- Prioritize multi-modality, or varied modes of interaction.

- Support user focus and help them efficiently achieve success. Example: via in-app guidance and tips.

- Mitigate language challenges. Example: include spell-check features or feedback mechanisms that don’t involve open text fields.

Finally, make sure you’re engaging users in guiding the design of the system to make it work better for them. Ask them questions like: How did that interaction feel? Did it foster trust, support efficiency, feel fulfilling?

Ultimately, thoughtfully embedding a human touch into your burgeoning AI system helps foster support and trust with your team. It will motivate the adoption of a new technology that has the potential to transform your business.

Stay tuned for more guidance and resources on designing for the human-at-the-helm coming in the new year.
