Thirty years ago, CPUs and other specialized processors handled virtually all computation tasks. The graphics cards of that era helped to speed up the drawing of 2D shapes in Windows and applications, but served no other purpose.

Fast forward to today, and the GPU has become one of the most dominant chips in the industry.

But long gone are the days when the sole function of a graphics chip was graphics. Ironically, machine learning and high-performance computing now depend heavily on the processing power of the humble GPU. Join us as we explore how this single chip evolved from a modest pixel pusher into a blazing powerhouse of floating-point computation.

In the beginning, CPUs ruled all

Let’s travel back to the late 1990s. The realm of high-performance computing, encompassing scientific endeavors with supercomputers, data processing on standard servers, and engineering and design tasks on workstations, relied entirely on two types of CPUs: 1) specialized processors designed for a singular purpose, and 2) off-the-shelf chips from AMD, IBM, or Intel.

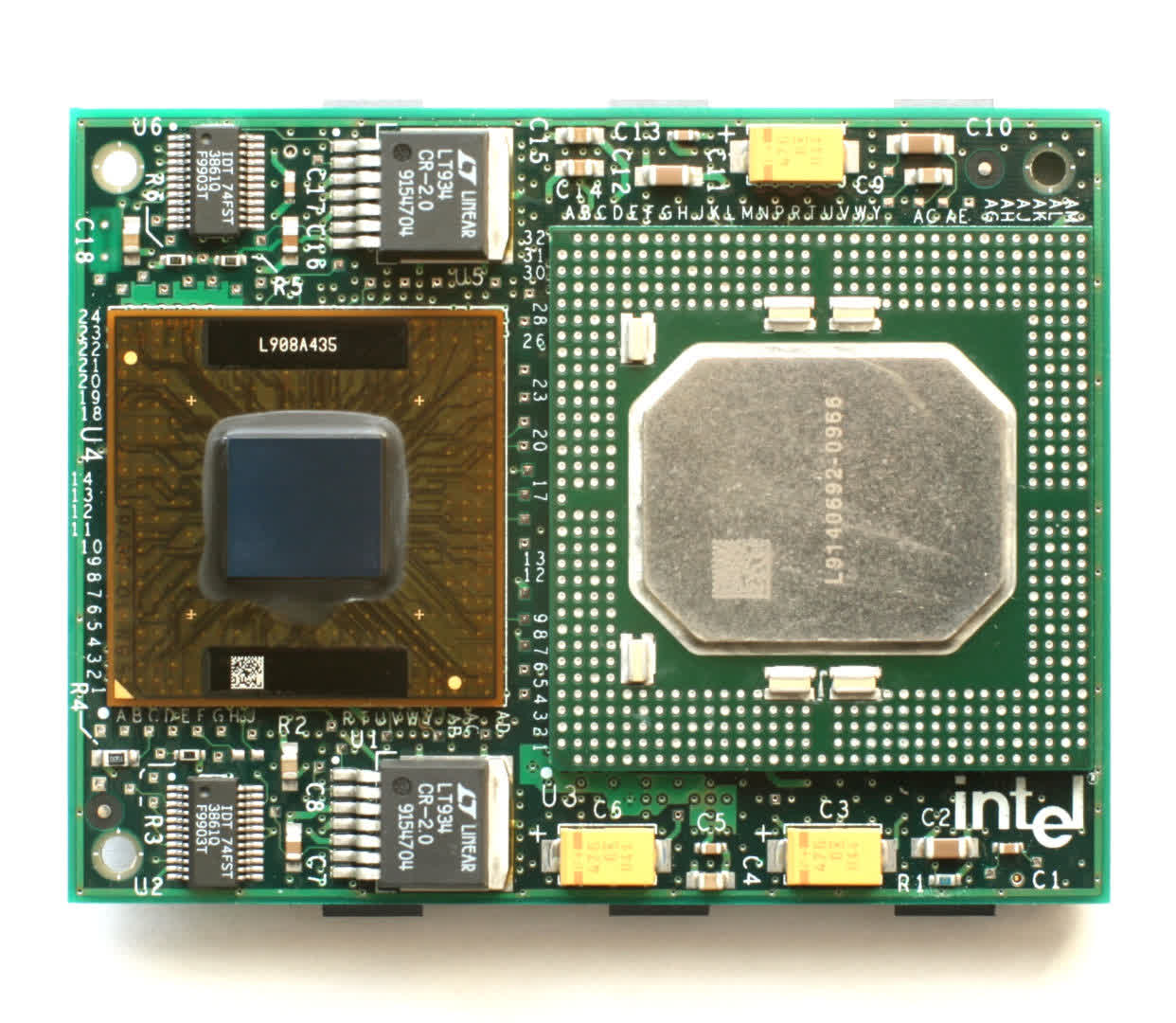

The ASCI Red supercomputer was one of the most powerful around 1997, comprising 9,632 Intel Pentium II Overdrive CPUs (pictured below). With each unit running at 333 MHz, the system boasted a theoretical peak compute performance of just over 3.2 TFLOPS (trillion floating point operations per second).

As we’ll be referring to TFLOPS often in this article, it’s worth spending a moment to explain what it signifies. In computer science, floating-point numbers, or floats for short, are data values that represent non-integer values, such as 6.2815 or 0.0044. Whole numbers, known as integers, are used frequently for the calculations needed to control a computer and any software running on it.

Floats are essential for situations where precision is paramount, especially anything related to science or engineering. Even a simple calculation, such as determining the circumference of a circle, involves at least one floating point value (π).

CPUs have had separate circuits for executing logic operations on integers and floats for many decades. The aforementioned Pentium II Overdrive could perform one basic float operation (multiply or add) per clock cycle. In theory, this means that ASCI Red had a peak floating point performance of 9,632 CPUs x 333 million clock cycles x 1 operation/cycle = 3,207,456 million FLOPS, or roughly 3.2 TFLOPS.
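The peak figure above is simply the product of three numbers. The Python sketch below reproduces that arithmetic. `peak_flops` is a hypothetical helper name of our own, and the result is a theoretical ceiling, not something a real workload would reach.

```python
def peak_flops(units: int, clock_hz: float, flops_per_cycle: float) -> float:
    """Theoretical peak = processing units x clock rate x float ops per unit per cycle."""
    return units * clock_hz * flops_per_cycle

# ASCI Red: 9,632 Pentium II Overdrive CPUs at 333 MHz, 1 float op per cycle each
asci_red = peak_flops(units=9_632, clock_hz=333e6, flops_per_cycle=1)
print(f"{asci_red / 1e6:,.0f} million FLOPS")  # 3,207,456 million FLOPS
print(f"{asci_red / 1e12:.2f} TFLOPS")         # 3.21 TFLOPS
```

The same formula applies to any chip in this article, provided you know how many operations each unit retires per cycle.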

These figures are based on ideal conditions (e.g., using the simplest instructions on data that fits into the cache) and are rarely achievable in real life. Nevertheless, they offer a good indication of the systems’ potential power.

Other supercomputers boasted similar numbers of standard processors: Blue Pacific at Lawrence Livermore National Laboratory used 5,808 IBM PowerPC 604e chips, and Los Alamos National Laboratory’s Blue Mountain (above) housed 6,144 MIPS Technologies R10000s.

To reach teraflop-level processing, one needed thousands of CPUs, all supported by vast amounts of RAM and hard drive storage. This was, and still is, due to the mathematical demands placed on these machines.

When we are first introduced to equations in physics, chemistry, and other subjects at school, everything is one-dimensional. In other words, we use a single number for distance, speed, mass, time, and so on. However, to accurately model and simulate phenomena, more dimensions are needed, and the mathematics ascends into the realm of vectors, matrices, and tensors.

These are treated as single entities in mathematics but comprise multiple values, which means any computer working through the calculations needs to handle many numbers simultaneously. Given that CPUs back then could only process one or two floats per cycle, thousands of them were needed.
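To see why the operation counts climb so quickly, consider multiplying two n×n matrices. Each of the n² output values needs n multiplies and n adds, so the total is about 2n³ operations. The plain-Python sketch below counts them using a hypothetical helper of our own, `matmul_counted`. It then estimates how long a single 1,000×1,000 multiply would take on one CPU doing one float operation per cycle at 333 MHz, an idealized assumption borrowed from the ASCI Red example.

```python
def matmul_counted(a, b):
    """Naive matrix multiply that also counts floating-point multiplies and adds."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    flops = 0
    for i in range(n):
        for j in range(m):
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]  # one multiply + one add
                flops += 2
            c[i][j] = acc
    return c, flops

# Two 4x4 matrices already need 2 * 4**3 = 128 floating-point operations.
a = [[float(i + j) for j in range(4)] for i in range(4)]
b = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]  # identity
c, flops = matmul_counted(a, b)
print(flops)  # 128

# At one FLOP per cycle and 333 MHz, one 1000x1000 multiply takes about 6 seconds.
n = 1000
print(f"{2 * n**3 / 333e6:.1f} s")  # 6.0 s
```

Real simulations chain millions of such operations, which is why thousands of CPUs were needed.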

SIMD enters the fray: MMX, 3DNow! and SSE

In 1997, Intel updated its Pentium CPU series with a technology extension called MMX – a set of instructions that utilized eight additional registers inside the core. Each one was designed to store between one and eight integer values. This allowed the processor to execute one instruction across multiple numbers simultaneously, an approach better known as SIMD (Single Instruction, Multiple Data).
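A packed-byte add in the style of MMX’s PADDB instruction treats one 64-bit register as eight independent 8-bit lanes. Each lane wraps around on overflow instead of carrying into its neighbor. Python has no access to MMX registers, so the sketch below emulates one with an ordinary integer and a well-known bit-masking trick, often called SWAR (SIMD within a register). It illustrates the idea only and is not how the hardware is actually wired. The helper names are our own.

```python
LOW7 = 0x7F7F7F7F7F7F7F7F   # low 7 bits of every byte lane
HIGH1 = 0x8080808080808080  # top bit of every byte lane

def pack_bytes(values):
    """Pack eight 0-255 integers into one 64-bit value, lane 0 in the lowest byte."""
    assert len(values) == 8
    word = 0
    for i, v in enumerate(values):
        word |= (v & 0xFF) << (8 * i)
    return word

def unpack_bytes(word):
    """Split a 64-bit value back into its eight byte lanes."""
    return [(word >> (8 * i)) & 0xFF for i in range(8)]

def packed_add_u8(a, b):
    """Add eight byte lanes at once, wrapping each lane modulo 256 (like PADDB).

    Adding only the low 7 bits of each lane can never carry across a lane
    boundary; the top bit of each lane is then fixed up with XOR.
    """
    low_sum = (a & LOW7) + (b & LOW7)
    return low_sum ^ ((a ^ b) & HIGH1)

x = pack_bytes([1, 2, 3, 4, 250, 128, 0, 255])
y = pack_bytes([1, 1, 1, 1, 10, 128, 0, 1])
print(unpack_bytes(packed_add_u8(x, y)))  # [2, 3, 4, 5, 4, 0, 0, 0]
```

One `packed_add_u8` call does the work of eight scalar additions, which is the whole point of SIMD.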

A year later, AMD launched its own version called 3DNow!. It was notably superior, as its registers could store floating point values. It took another year before Intel addressed this shortcoming with the introduction of SSE (Streaming SIMD Extensions) in the Pentium III.

As the calendar rolled into a new millennium, designers of high-performance computers had access to standard processors that could efficiently handle vector mathematics.

Once scaled into the thousands, these processors could manage matrices and tensors equally well. Despite this advancement, the world of supercomputers still favored older or specialized chips, as these new extensions weren’t exactly designed for such tasks. The same was true of another rapidly spreading processor, one better at SIMD work than any CPU from AMD or Intel: the GPU.

In the early years of graphics processors, the CPU processed the calculations for the triangles composing a scene (hence the 3DNow! name that AMD used for its implementation of SIMD). However, the coloring and texturing of pixels were handled exclusively by the GPU, and many aspects of this work involved vector mathematics.

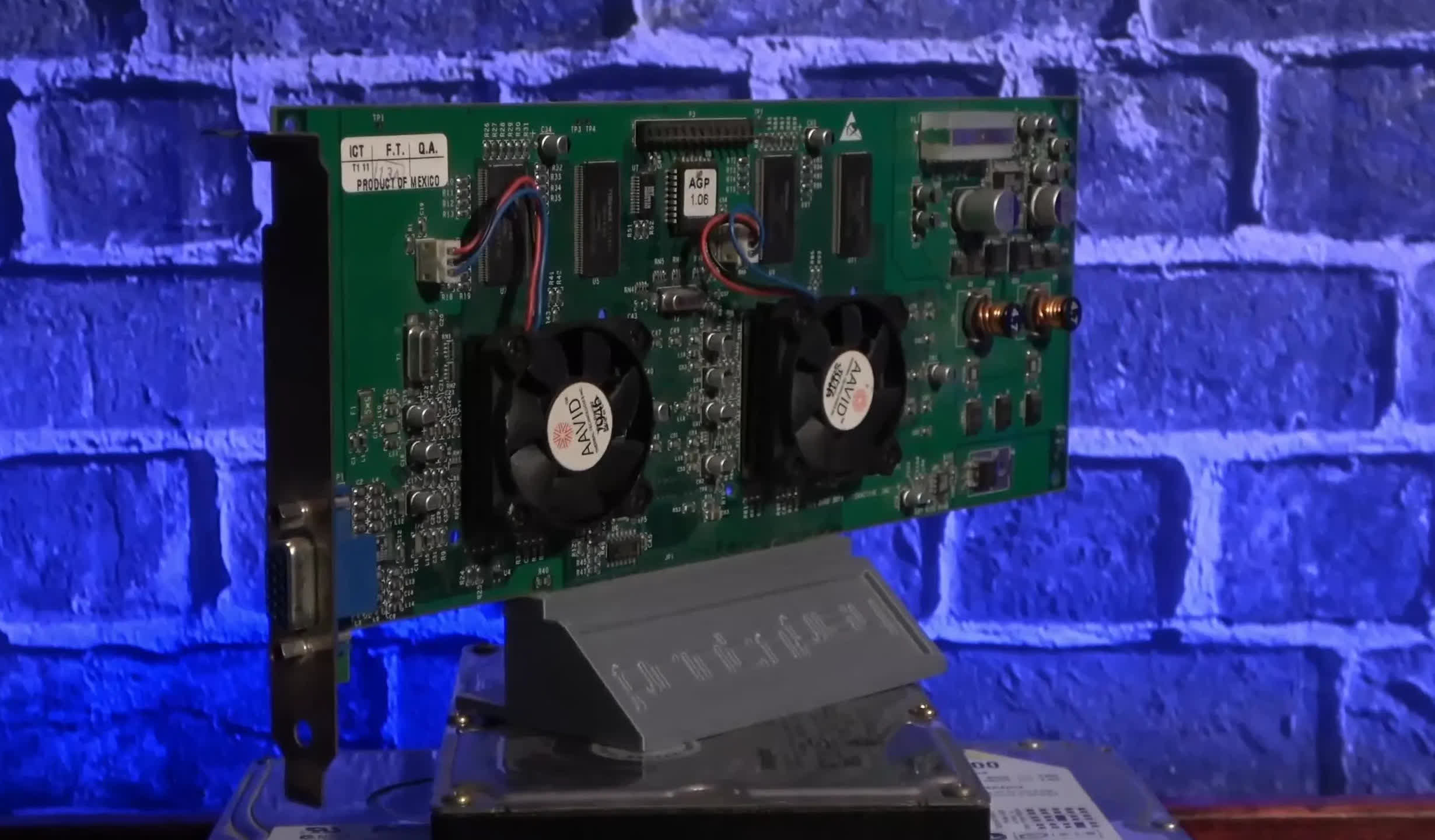

The best consumer-grade graphics cards from 20+ years ago, such as the 3dfx Voodoo5 5500 and the Nvidia GeForce 2 Ultra, were outstanding SIMD devices. However, they were created to produce 3D graphics for games and nothing else. Even cards in the professional market were solely focused on rendering.

ATI’s $2,000 FireGL 3 sported two IBM chips (a GT1000 geometry engine and an RC1000 rasterizer), an enormous 128 MB of DDR-SDRAM, and a claimed 30 GFLOPS of processing power. But all of that was for accelerating graphics in programs like 3D Studio Max and AutoCAD, using the OpenGL rendering API.

GPUs of that era weren’t equipped for other uses, as the processes behind transforming 3D objects and converting them into monitor images didn’t involve a substantial amount of floating point math. In fact, a significant part of it was done at the integer level, and it would take several years before graphics cards started heavily working with floating point values throughout their pipelines.

One of the first was ATI’s R300 processor, which had 8 separate pixel pipelines, handling all of the math at 24-bit floating point precision. Unfortunately, there was no way of harnessing that power for anything other than graphics: the hardware and associated software were entirely image-centric.

Computer engineers weren’t oblivious to the fact that GPUs had vast amounts of SIMD power but lacked a way to apply it in other fields. Surprisingly, it was a gaming console that showed how to solve this thorny problem.

A new era of unification

Microsoft’s Xbox 360 hit the shelves in November 2005, featuring a CPU designed and manufactured by IBM based on the PowerPC architecture, and a GPU designed by ATI and fabricated by TSMC.

This graphics chip, codenamed Xenos, was special because its architecture completely eschewed the classic approach of separate vertex and pixel pipelines.

In their place was a three-way cluster of SIMD arrays. Specifically, each array consisted of 16 vector processors, each containing 5 math units. This structure enabled each array to execute two sequential instructions from a thread, per cycle, on 80 floating point data values simultaneously.

In this design, known as a unified shader architecture, each array could process any type of shader. Despite making other aspects of the chip more complicated, Xenos sparked a design paradigm that remains in use today. With a clock speed of 500 MHz, the entire cluster could theoretically achieve a processing rate of 240 GFLOPS (500 MHz x 3 arrays x 80 values x 2 operations) for three threads of a multiply-then-add command.

To give this figure some sense of scale, some of the world’s top supercomputers a decade earlier couldn’t match this speed. For instance, the Intel Paragon XP/S 140 at Sandia National Laboratories, which topped the world’s supercomputer list in 1994 with its 3,680 Intel i860 CPUs, had a peak of 184 GFLOPS. The pace of chip development quickly outpaced this machine, but the same would be true of the GPU.

CPUs had been incorporating their own SIMD arrays for several years. For example, Intel’s original Pentium MMX had a dedicated unit for executing instructions on a vector of up to eight 8-bit integers. By the time Xbox’s Xenos was being used in homes worldwide, such units had at least doubled in size, but they were still minuscule compared to those in Xenos.

When consumer-grade graphics cards began to feature GPUs with a unified shader architecture, they already boasted a noticeably higher processing rate than the Xbox 360’s graphics chip.

Nvidia’s G80 (above), as used in the GeForce 8800 GTX (2006), had a theoretical peak of 346 GFLOPS, and ATI’s R600 in the Radeon HD 2900 XT (2007) boasted 476 GFLOPS.

Both graphics chip makers quickly capitalized on this computing power in their professional models. While exorbitantly priced, the ATI FireGL V8650 and Nvidia Tesla C870 were well-suited for high-end scientific computers. However, at the highest level, supercomputers worldwide continued to rely on standard CPUs. In fact, several years would pass before GPUs started appearing in the most powerful systems.

So why weren’t GPUs used right away, when they clearly offered an enormous amount of processing speed?

Firstly, supercomputers and similar systems are extremely expensive to design, construct, and operate. For years, they had been built around massive arrays of CPUs, so integrating another processor wasn’t an overnight endeavor. Such systems required thorough planning and initial small-scale testing before increasing the chip count.

Secondly, getting all these components to function harmoniously, especially in terms of software, is no small feat, and this was a significant weakness for GPUs at the time. While they had become highly programmable, the software available for them was rather limited.

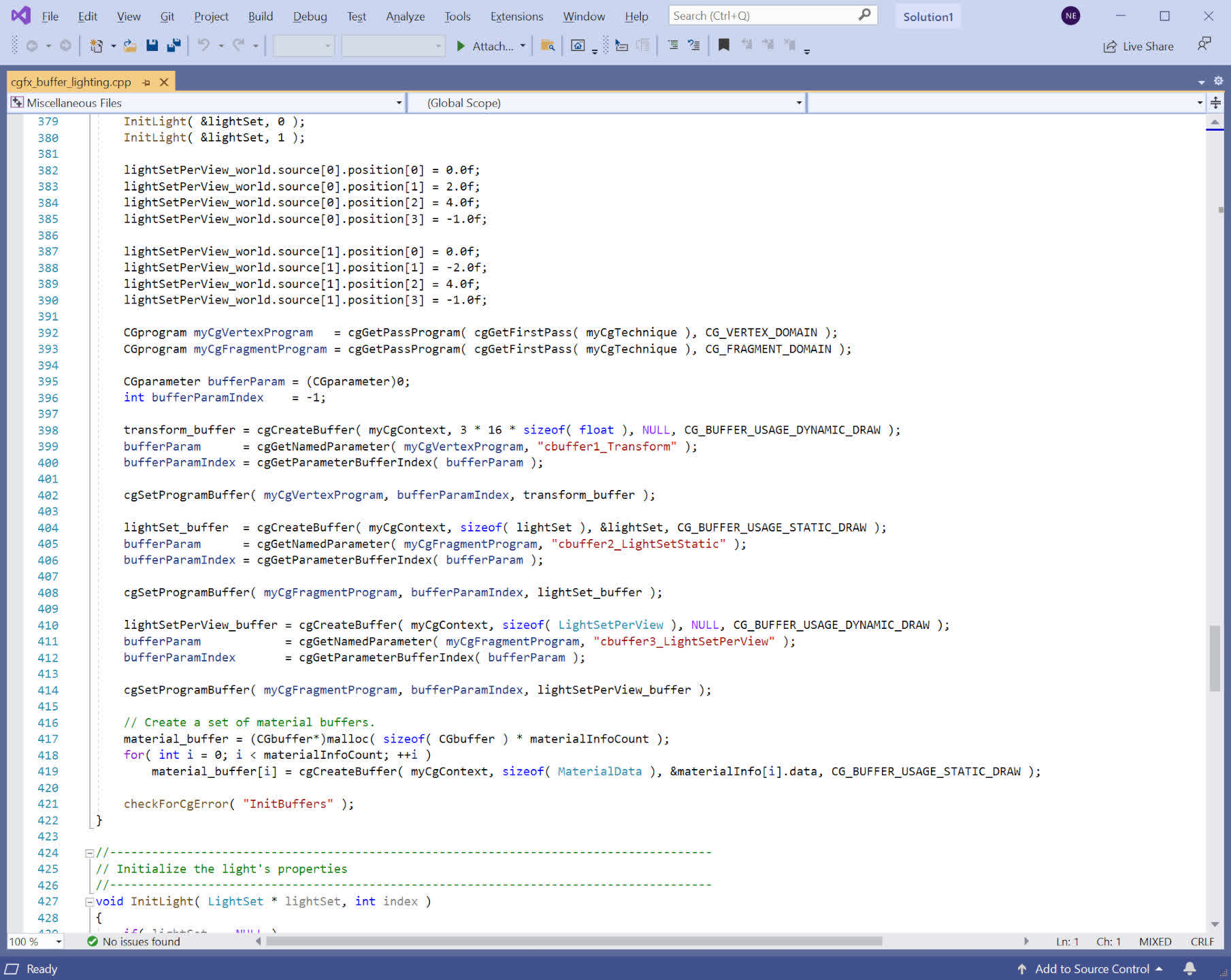

Microsoft’s HLSL (High-Level Shader Language), Nvidia’s Cg, and OpenGL’s GLSL made it simple to access the processing capability of a graphics chip, though purely for rendering.

That all changed with unified shader architecture GPUs.

In 2006, ATI (which by then had become a subsidiary of AMD) and Nvidia released software toolkits aimed at exposing this power for more than just graphics, with their APIs called CTM (Close To Metal) and CUDA (Compute Unified Device Architecture), respectively.

What the scientific and data processing community really needed, however, was a comprehensive package: one that would treat massive arrays of CPUs and GPUs (often referred to as a heterogeneous platform) as a single entity made up of numerous compute devices.

Their need was met in 2009. Originally developed by Apple, OpenCL was released by the Khronos Group, which had absorbed OpenGL a few years earlier, and it became the de facto software platform for using GPUs outside of everyday graphics. The field was then known as GPGPU (general-purpose computing on GPUs), a term coined by Mark Harris.

The GPU enters the compute race

Unlike the expansive world of tech reviews, there aren’t hundreds of reviewers globally testing supercomputers against their claimed performance. However, an ongoing project started in the early 1990s by the University of Mannheim in Germany seeks to do just that.

Called the TOP500, the project releases a ranked list of the 500 most powerful supercomputers in the world twice a year.

The first entries boasting GPUs appeared in 2010, with two systems in China: Nebulae and Tianhe-1. These relied on Nvidia’s Tesla C2050 (essentially a GeForce GTX 470, as shown in the picture below) and AMD’s Radeon HD 4870 chips, respectively, with Nebulae boasting a theoretical peak of 2,984 TFLOPS.

During these early days of high-end GPGPU, Nvidia was the preferred vendor for outfitting a computing behemoth. The reason was not performance, since AMD’s Radeon cards usually offered more raw processing power, but software support. CUDA underwent rapid development, and it would be several years before AMD had a suitable alternative. In the meantime, AMD encouraged users to go with OpenCL instead.

However, Nvidia didn’t entirely dominate the market, as Intel’s Xeon Phi processor tried to carve out a niche. Emerging from an aborted GPU project named Larrabee, these massive chips were a peculiar CPU-GPU hybrid, composed of multiple Pentium-like cores (the CPU part) paired with large floating-point units (the GPU part).

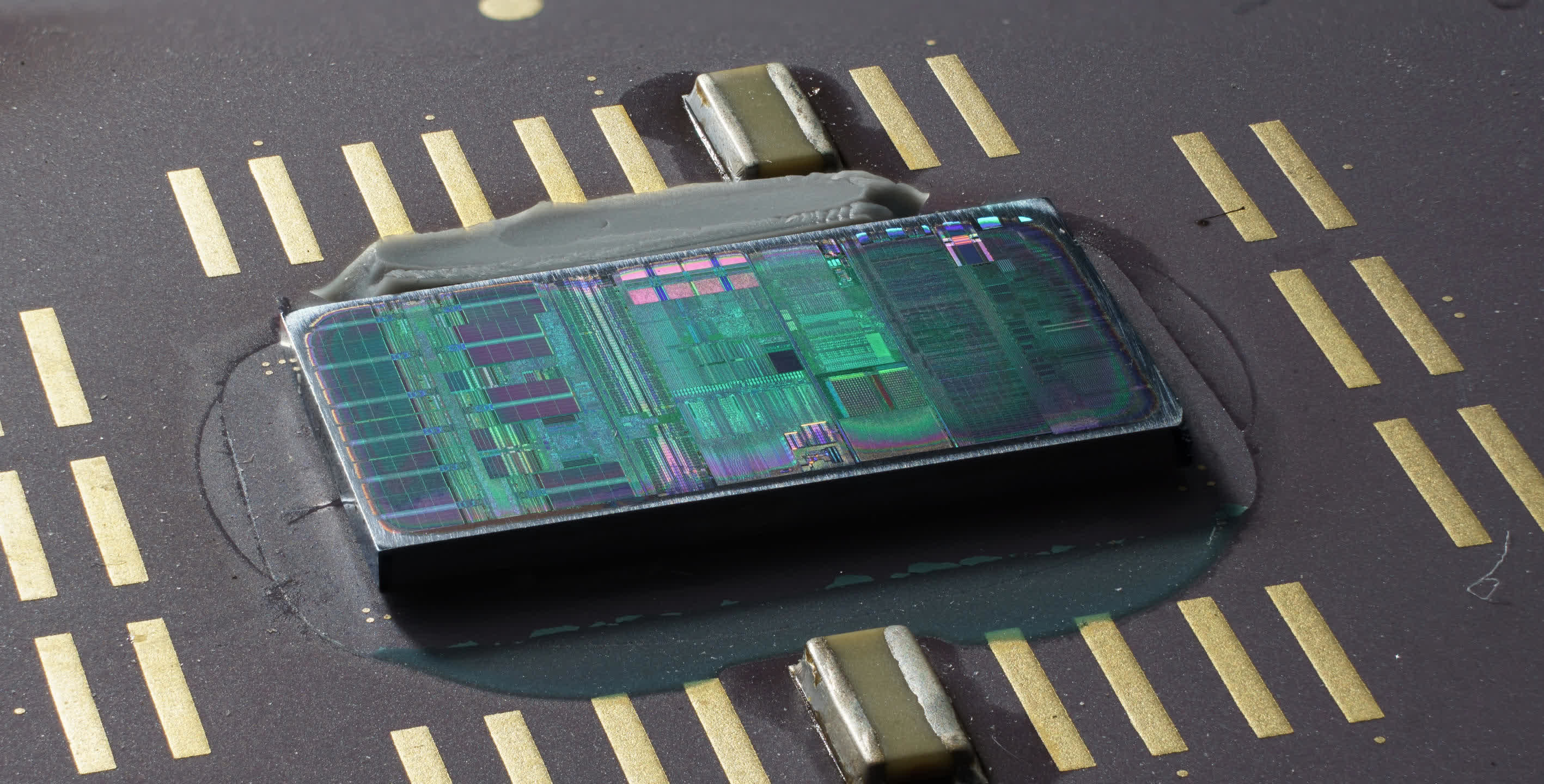

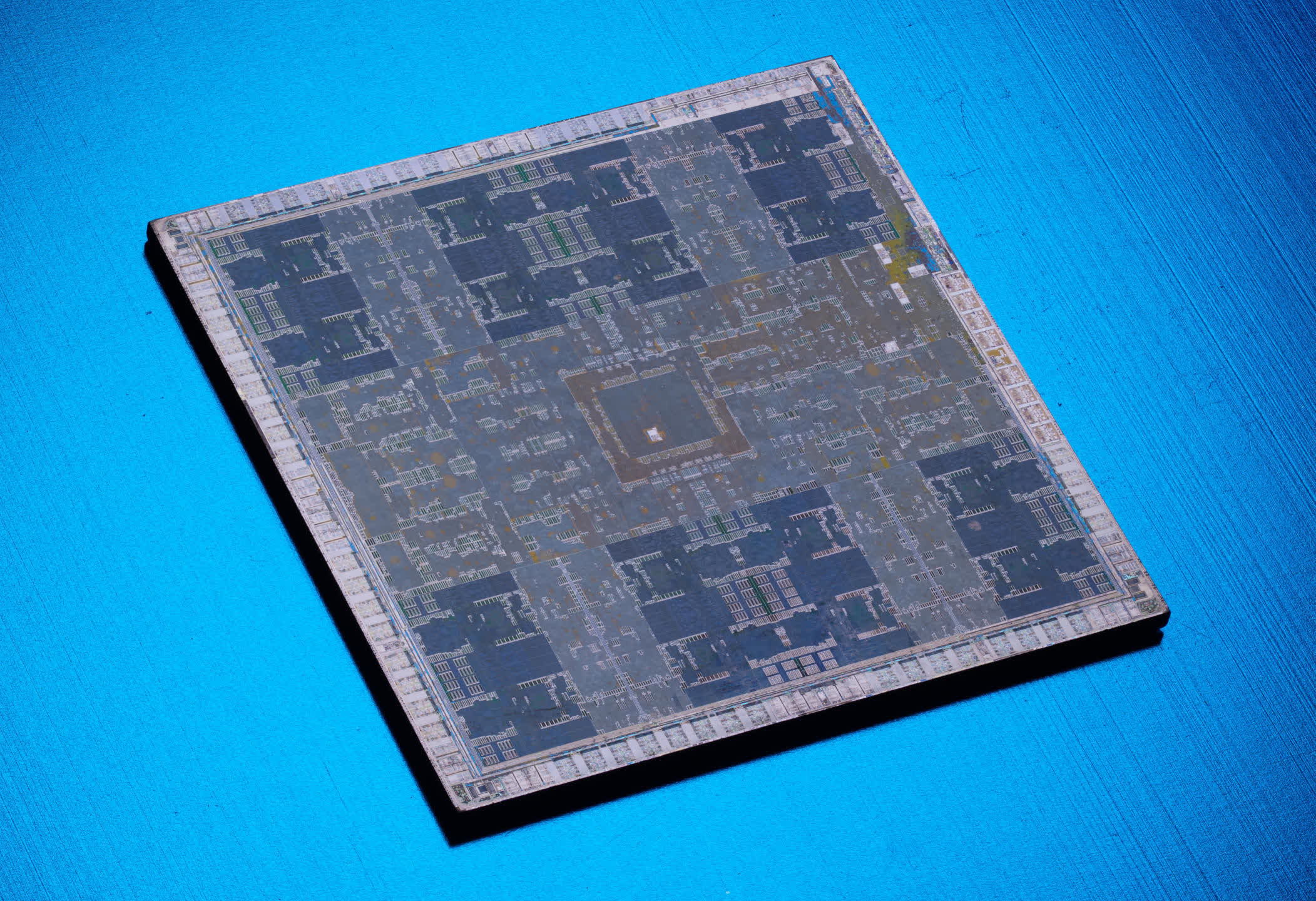

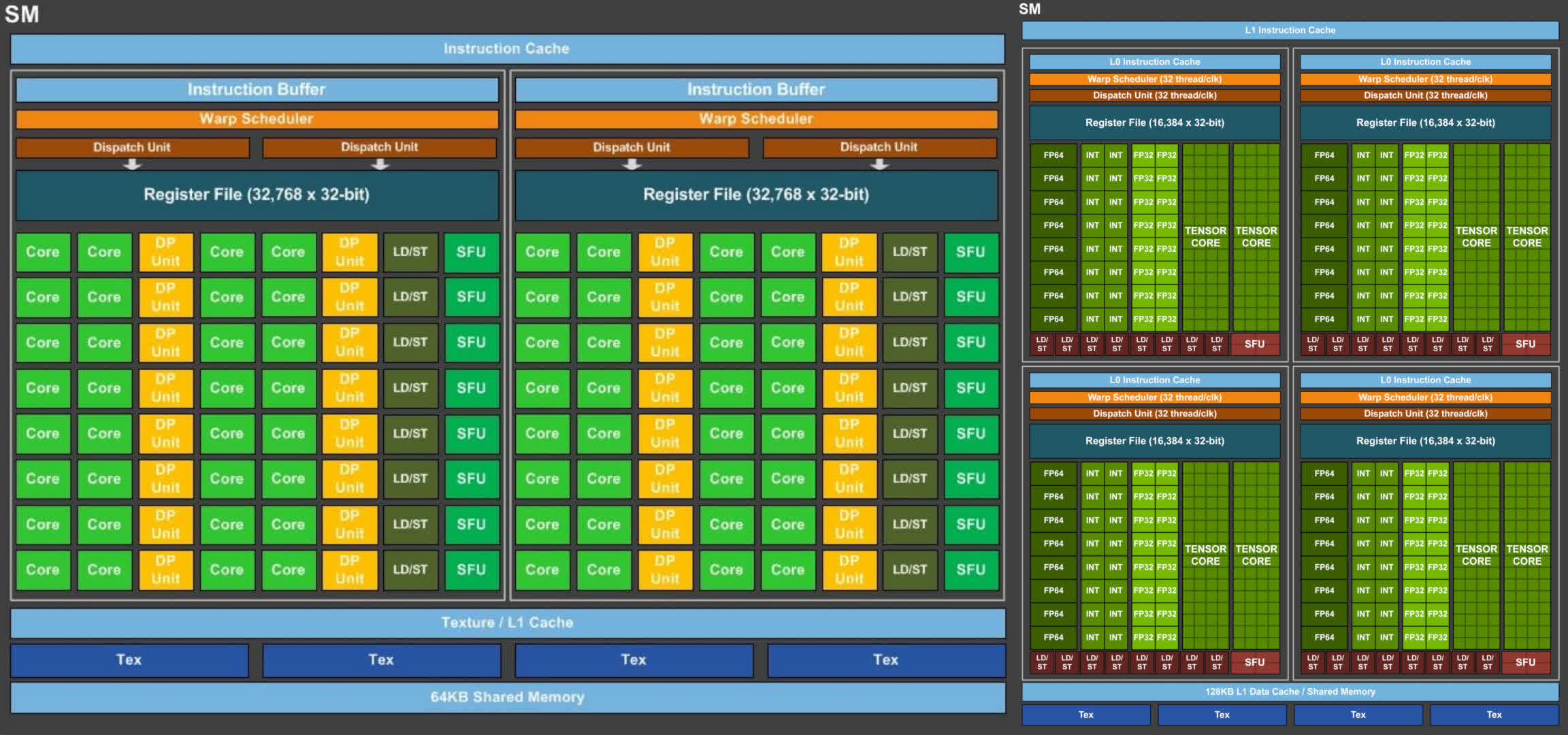

An examination of the Nvidia Tesla C2050’s internals reveals 14 blocks called Streaming Multiprocessors (SMs), separated by cache and a central controller. Each one includes 32 sets of two logic circuits (which Nvidia calls CUDA cores) that execute all the mathematical operations: one for integer values, and the other for floats. In the latter’s case, the cores can manage one FMA (Fused Multiply-Add) operation per clock cycle at single (32-bit) precision; double precision (64-bit) operations require at least two clock cycles.
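An FMA computes a × b + c with a single rounding at the end, instead of rounding the product first and the sum second. It is also why peak-throughput figures count each FMA as two FLOPs. The sketch below emulates a correctly rounded FMA in plain Python: it does the arithmetic exactly with `fractions.Fraction` and rounds once when converting back to a float. It uses Python’s 64-bit floats rather than the GPU’s 32-bit format, and `fma` is our own helper name, not a GPU API.

```python
from fractions import Fraction

def fma(a: float, b: float, c: float) -> float:
    """a * b + c computed exactly, then rounded once to the nearest double."""
    return float(Fraction(a) * Fraction(b) + Fraction(c))

a, b, c = 0.1, 10.0, -1.0
unfused = a * b + c   # the product rounds to exactly 1.0 first, so the sum is 0.0
fused = fma(a, b, c)  # keeps the tiny error in 0.1's binary representation
print(unfused)  # 0.0
print(fused)    # 5.551115123125783e-17
```

Because one FMA is a multiply plus an add, a chip with N FMA units running at clock f has a theoretical peak of 2 x N x f FLOPS.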

The floating-point units in the Xeon Phi chip (shown below) appear somewhat similar, except each core processes half as many data values as the SMs in the C2050. Nevertheless, as there are 32 repeated cores compared to the Tesla’s 14, a single Xeon Phi processor can handle more values per clock cycle overall. However, Intel’s first release of the chip was more of a prototype and couldn’t fully realize its potential: Nvidia’s product ran faster, consumed less power, and proved to be ultimately superior.

This would become a recurring theme in the three-way GPGPU battle among AMD, Intel, and Nvidia. One model might have more processing cores, while another might have a faster clock speed or a more robust cache system.

CPUs remained essential for all types of computing, and many supercomputers and high-end computing systems still consisted of AMD or Intel processors. While a single CPU couldn’t compete with the SIMD performance of an average GPU, when linked together in the thousands, they proved adequate. However, such systems lacked power efficiency.

For example, at the same time that the Radeon HD 4870 GPU was being used in the Tianhe-1 supercomputer, AMD’s biggest server CPU (the 12-core Opteron 6176 SE) was doing the rounds. For a power consumption of around 140 W, the CPU could theoretically hit 220 GFLOPS, while the GPU offered a peak of 1,200 GFLOPS for just an extra 10 W, and at a fraction of the cost. That works out to roughly 1.6 GFLOPS per watt for the CPU versus 8 GFLOPS per watt for the GPU.

A little graphics card that could (do more)

A few years later, it wasn’t only the world’s supercomputers that were leveraging GPUs to conduct parallel calculations en masse. Nvidia was actively promoting its GRID platform, a GPU virtualization service, for scientific and other applications. GRID was originally launched as a system to host cloud-based gaming, but the rising demand for large-scale, affordable GPGPU made this transition inevitable. At Nvidia’s annual technology conference, GRID was presented as a significant tool for engineers across various sectors.

At the same event, the GPU maker offered a glimpse into a future architecture, codenamed Volta. Few details were released, and the general assumption was that this would be another chip serving all of Nvidia’s markets.

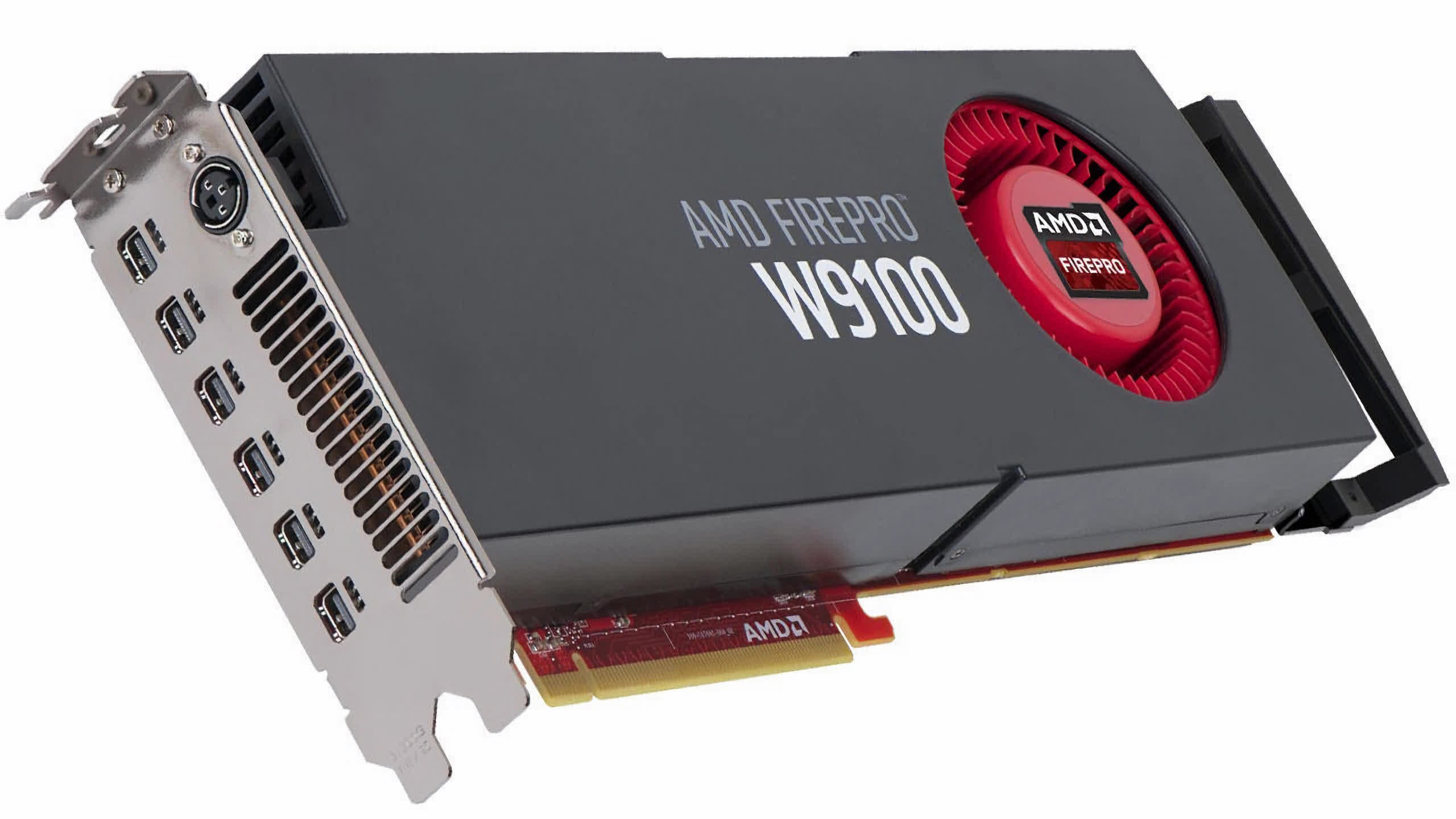

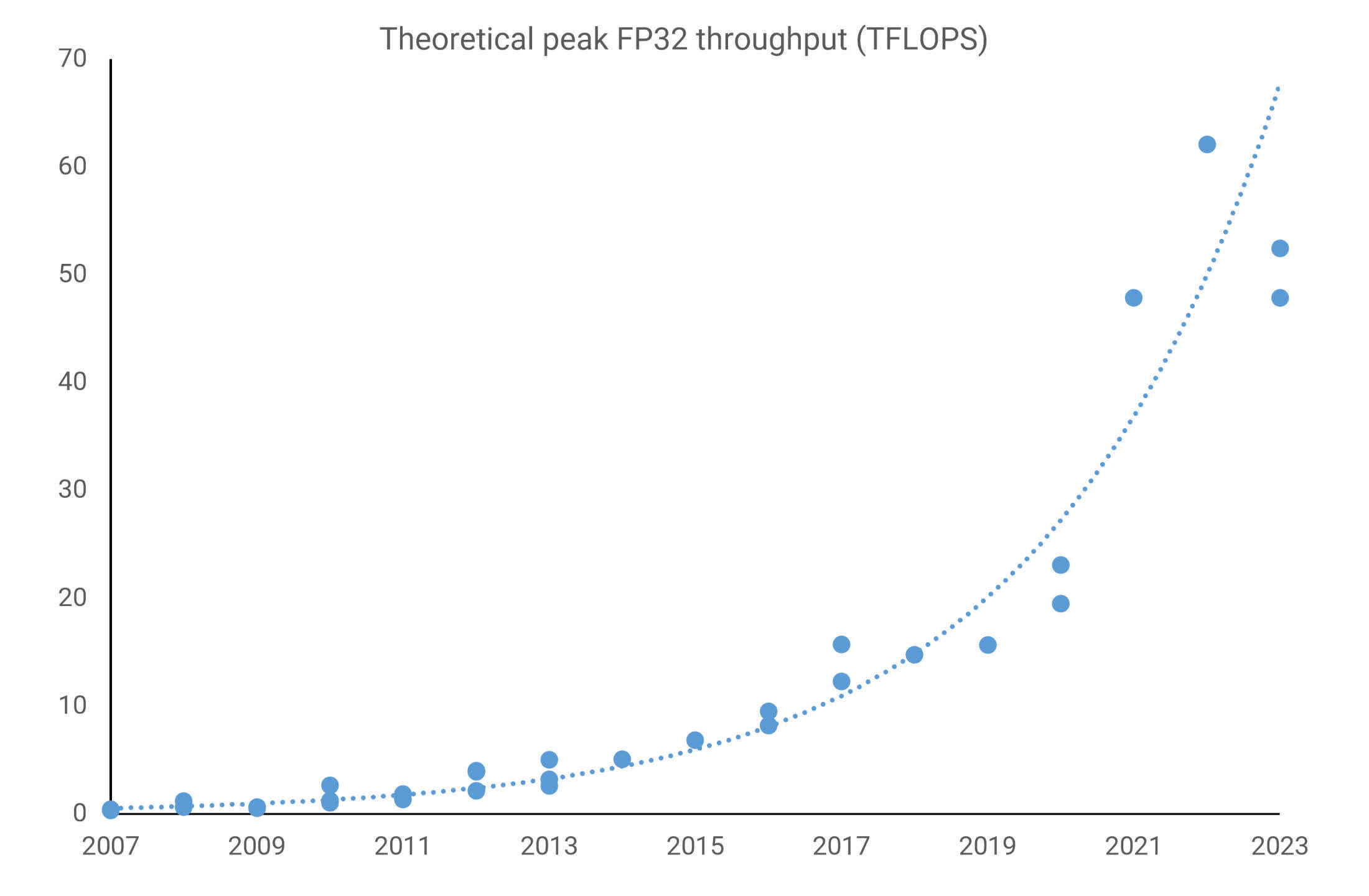

Meanwhile, AMD was doing something similar, using its regularly updated Graphics Core Next (GCN) design in its gaming-focused Radeon lineup, as well as its FirePro and Radeon Sky server-based cards. By then, the performance figures were astonishing: the FirePro W9100 had a peak FP32 (32-bit floating point) throughput of 5.2 TFLOPS, a figure that would have been unthinkable for a supercomputer less than two decades earlier.

GPUs were still primarily designed for 3D graphics, but advancements in rendering technologies meant that these chips had to become increasingly proficient at handling general compute workloads. The only issue was their limited capability for high-precision floating-point math, i.e., FP64 or greater.
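The gap between FP32 and FP64 is easy to see in practice. A 32-bit float has a 24-bit significand, so above 2^24 it cannot represent every integer, and small contributions are lost when added to a large running total. That is a real problem for long scientific simulations. The sketch below rounds values to FP32 with Python’s `struct` module (Python’s own float is FP64). `to_fp32` is our own helper name.

```python
import struct

def to_fp32(x: float) -> float:
    """Round a Python float (which is FP64) to the nearest FP32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]

big = 2.0**24  # 16,777,216: beyond this, FP32 cannot represent every integer

print(to_fp32(big + 1.0))  # 16777216.0 (the +1 is rounded away in FP32)
print(big + 1.0)           # 16777217.0 (FP64 keeps it)

# Add 1.0 ten times, rounding to FP32 after every step as FP32 hardware would
acc32, acc64 = to_fp32(big), big
for _ in range(10):
    acc32 = to_fp32(acc32 + 1.0)
    acc64 += 1.0
print(acc32, acc64)  # 16777216.0 16777226.0
```

In FP32 the running total never moves, while FP64 records all ten additions.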

Looking at the top supercomputers of 2015 shows a relatively small number using accelerators, either Intel’s Xeon Phi or Nvidia’s Tesla, compared to those that were entirely CPU-based.

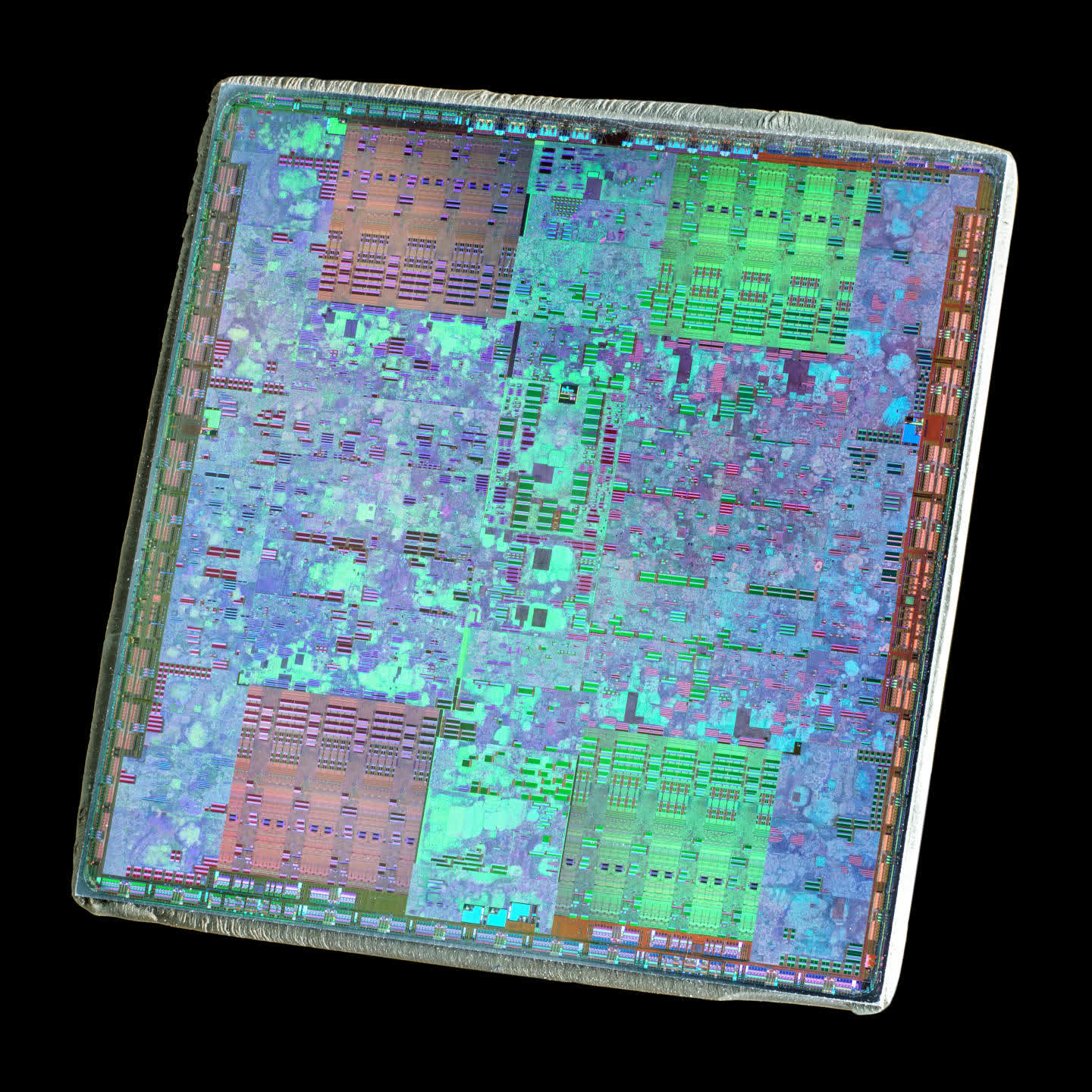

That all changed when Nvidia launched the Pascal architecture in 2016. This was the company’s first foray into designing a GPU exclusively for the high-performance computing market, with the other Pascal chips serving multiple sectors. Only one of the former was ever made (the GP100) and it spawned only 5 products, but where all previous architectures only sported a handful of FP64 cores, this chip housed nearly 2,000 of them.

With the Tesla P100 offering over 9 TFLOPS of FP32 processing and half that figure for FP64, it was seriously powerful. AMD’s Radeon Pro WX 9100, using the Vega 10 chip, was 30% faster in FP32 but several times slower in FP64. By this point, Intel was preparing to discontinue Xeon Phi due to poor sales.

A year later, Nvidia finally launched Volta, making it immediately apparent that the company wasn’t only interested in introducing its GPUs to the HPC and data processing markets: it was targeting another one as well.

Neurons, networks, oh my!

Deep Learning is a field within the broader set of disciplines known as Machine Learning, which in turn is a subset of Artificial Intelligence. It involves the use of complex mathematical models, known as neural networks, that extract information from given data.

An example of this is determining the probability that a presented image depicts a specific animal. To do this, the model needs to be ‘trained’: in this example, shown millions of images of that animal, along with millions more that do not show the animal. The mathematics involved is rooted in matrix and tensor computations.
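As a rough illustration of why this is matrix math, a single layer of a simple classifier multiplies an input vector by a weight matrix, adds a bias, and squashes the result into a probability. The sketch below shows one such output neuron in plain Python. The four-pixel “image” and the weights are made-up placeholders rather than trained values, and a real image model would have millions of parameters.

```python
import math

def dense(weights, bias, x):
    """One fully connected layer: y = W·x + b (a matrix-vector product)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical 4-pixel "image" and untrained weights for a single output neuron
pixels = [0.2, 0.8, 0.5, 0.1]
weights = [[0.5, -1.0, 2.0, 0.3]]
bias = [0.1]

logit = dense(weights, bias, pixels)[0]
p_animal = sigmoid(logit)
print(f"P(animal) = {p_animal:.3f}")  # P(animal) = 0.606
```

Training repeatedly nudges `weights` and `bias` using millions of labeled examples. Processing those examples in batches turns the matrix-vector product into a matrix-matrix one, exactly the kind of SIMD-heavy work GPUs excel at.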

For decades, such workloads were only suitable for massive CPU-based supercomputers. However, as early as the 2000s, it was apparent that GPUs were ideally suited to such tasks.

Even so, Nvidia gambled on a significant expansion of the deep learning market and added an extra feature to its Volta architecture to make it stand out in this field. Marketed as tensor cores, these were banks of FP16 logic units, operating together as a large array, but with very limited capabilities.

In fact, they were so limited that they performed just one function: multiplying two FP16 4×4 matrices together and then adding another FP16 or FP32 4×4 matrix to the result (a process known as a GEMM operation). Nvidia’s previous GPUs, as well as those from competitors, were also capable of performing such calculations, but nowhere near as quickly as Volta. The only GPU made using this architecture, the GV100, housed 672 tensor cores (640 of them enabled on the Tesla V100). Each could complete one such 4×4 GEMM, or 64 fused multiply-add operations, per clock cycle.

Depending on the size of the matrices in the dataset and the floating point format used, the Tesla V100 card could theoretically reach 125 TFLOPS in these tensor calculations. Volta was clearly designed for a niche market, but where the GP100 made limited inroads into the supercomputer field, the new Tesla models were rapidly adopted.
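A tensor core multiplies two 4×4 FP16 matrices and adds a third (D = A × B + C), and that operation can be sketched in plain Python. The version below rounds A and B to FP16 using the `struct` module’s half-precision `'e'` format. It also rounds the accumulator to FP32 after each multiply-add, which only approximates the hardware’s internal rounding. The sketch counts the multiply-adds per tile and then multiplies out a peak figure. It assumes 640 enabled tensor cores and a 1.53 GHz boost clock for the Tesla V100, values taken from Nvidia’s published specifications rather than this article. All helper names are our own.

```python
import struct

def to_fp16(x: float) -> float:
    """Round to the nearest IEEE half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]

def to_fp32(x: float) -> float:
    """Round to the nearest IEEE single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]

def mma_4x4(a, b, c):
    """D = A x B + C on 4x4 tiles: FP16 inputs, FP32 accumulation. Returns (D, fma_count)."""
    a16 = [[to_fp16(v) for v in row] for row in a]
    b16 = [[to_fp16(v) for v in row] for row in b]
    d = [[to_fp32(v) for v in row] for row in c]
    fmas = 0
    for i in range(4):
        for j in range(4):
            for k in range(4):
                d[i][j] = to_fp32(d[i][j] + a16[i][k] * b16[k][j])
                fmas += 1
    return d, fmas

identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
b = [[0.5 * (i * 4 + j) for j in range(4)] for i in range(4)]
c = [[1.0] * 4 for _ in range(4)]
d, fmas = mma_4x4(identity, b, c)
print(fmas)     # 64
print(d[3][3])  # 8.5 (0.5 * 15 + 1.0)

# Peak estimate: tensor cores x FMAs per clock x 2 FLOPs per FMA x clock (assumed values)
tensor_cores, clock_hz = 640, 1.53e9
print(f"{tensor_cores * 64 * 2 * clock_hz / 1e12:.1f} TFLOPS")  # 125.3 TFLOPS
```

Under those assumptions, the result lands on the roughly 125 TFLOPS quoted for the Tesla V100.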

PC enthusiasts will be aware that Nvidia subsequently added tensor cores to its mainstream consumer products in the following Turing architecture. It also developed an upscaling technology called DLSS (Deep Learning Super Sampling), which uses the GPU’s tensor cores to run a neural network on an upscaled image, correcting any artifacts in the frame.

For a brief period, Nvidia had the GPU-accelerated deep learning market to itself, and its data center division saw revenues surge, with growth rates of 145% in FY17, 133% in FY18, and 52% in FY19. By the end of FY19, sales for HPC, deep learning, and other data center uses totaled $2.9 billion.

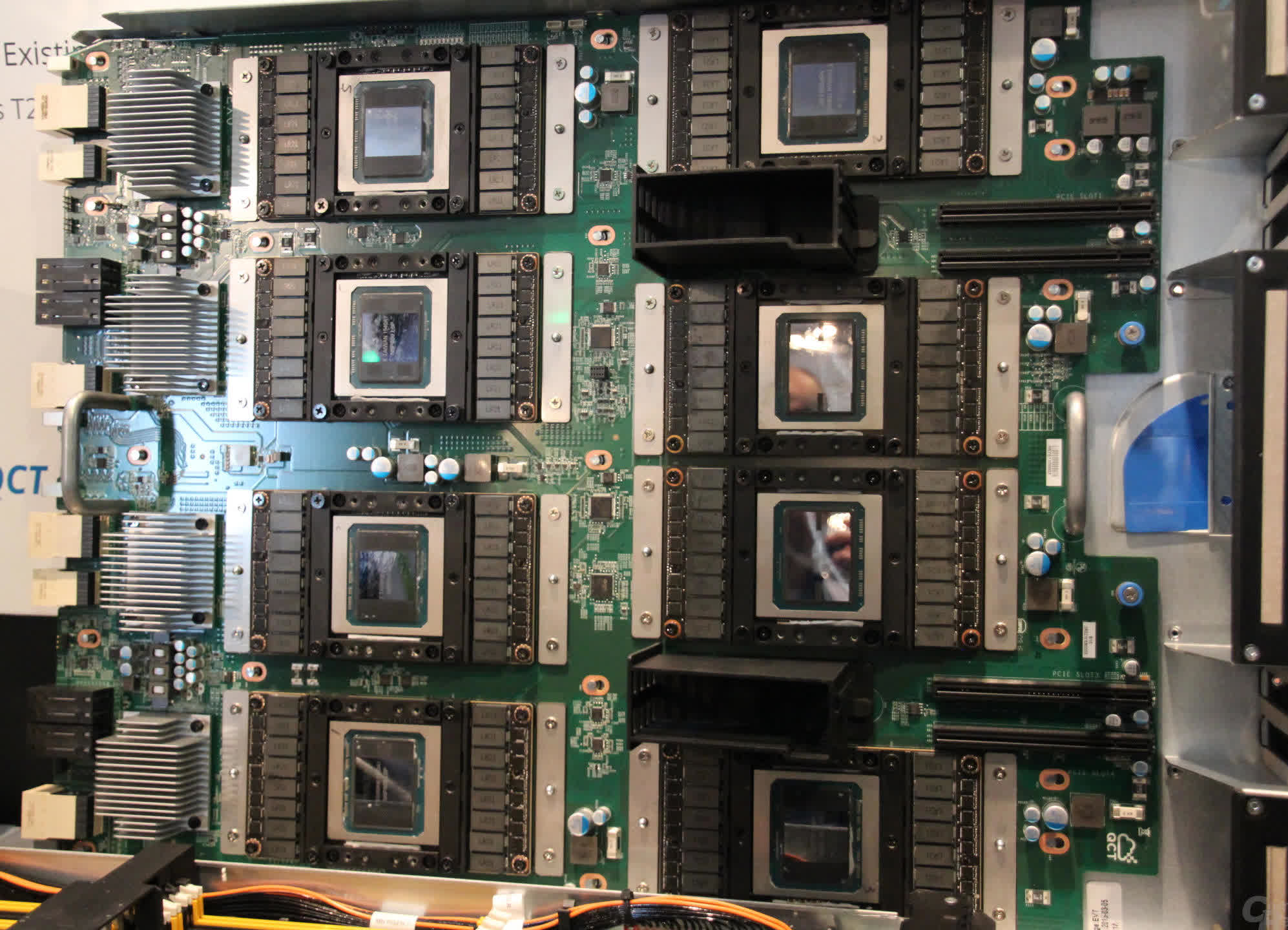

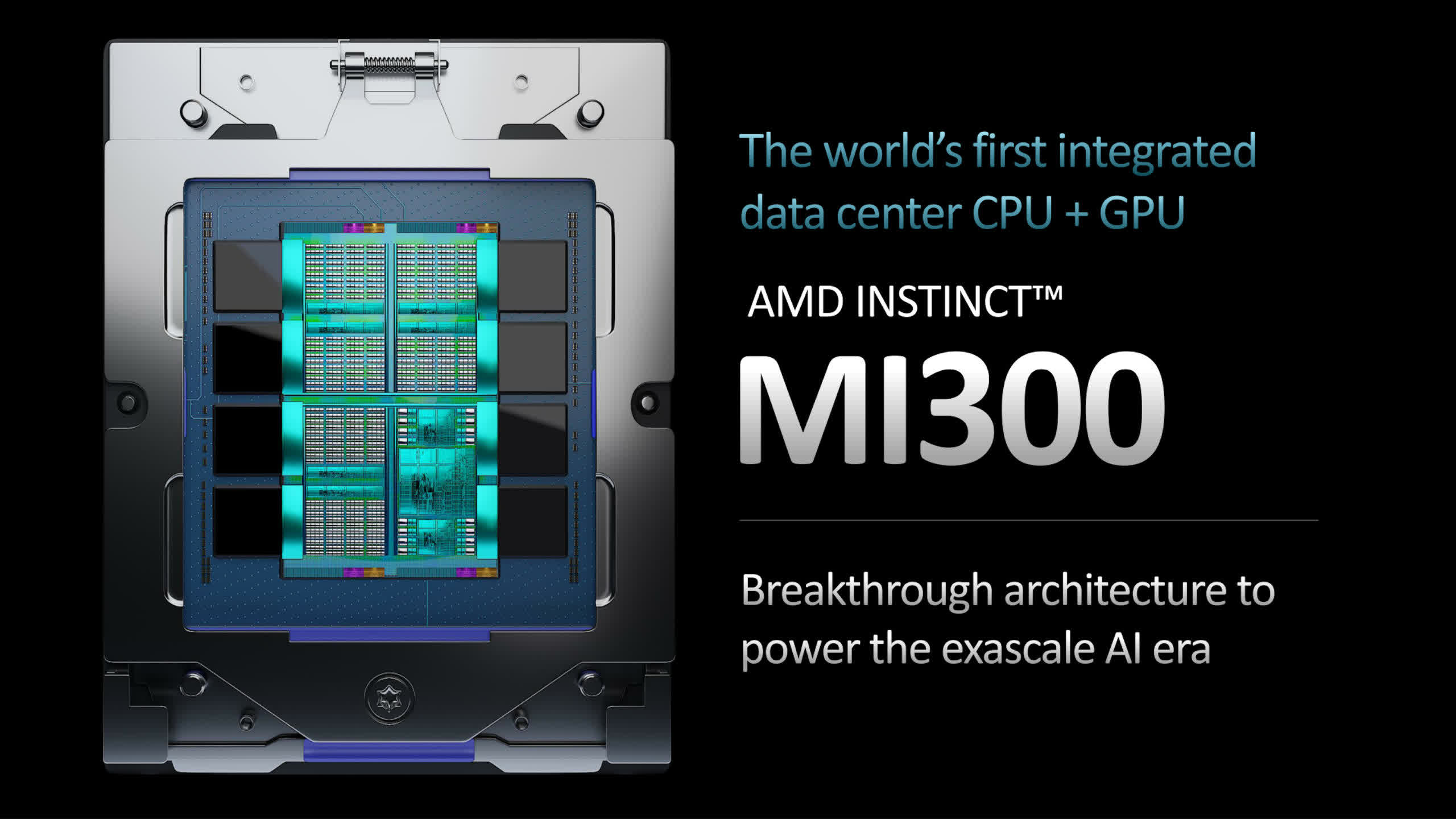

However, where there’s money, competition is inevitable. In 2018, Google began offering access to its own tensor processing chips, which it had developed in-house, via a cloud service. Amazon soon followed suit with its specialized CPU, the AWS Graviton. Meanwhile, AMD was restructuring its GPU division, forming two distinct product lines: one predominantly for gaming (RDNA) and the other exclusively for computing (CDNA).

While RDNA was notably different from its predecessor, CDNA was very much a natural evolution of GCN, albeit one scaled to an enormous level. Looking at today’s GPUs for supercomputers, data servers, and AI machines, everything is huge.

AMD’s CDNA 2-powered MI250X sports 220 Compute Units, providing just under 48 TFLOPS of double-precision FP64 throughput and 128 GB of High Bandwidth Memory (HBM2e), both of which are highly sought after in HPC applications. Nvidia’s GH100 chip, using its Hopper architecture and 576 Tensor Cores, can potentially hit 4,000 TOPS using the low-precision INT8 number format in AI matrix calculations.

Intel’s Ponte Vecchio GPU is similarly gargantuan, with 100 billion transistors, and AMD’s MI300 has 46 billion more, comprising multiple CPU, graphics, and memory chiplets.

However, one thing they all share is what they’re decidedly not: they aren’t GPUs. Long before Nvidia appropriated the term as a marketing tool, the acronym stood for Graphics Processing Unit. AMD’s MI250X has no render output units (ROPs) at all, and even the GH100 only offers the Direct3D performance of something akin to a GeForce GTX 1050, rendering the ‘G’ in GPU irrelevant.

So, what might we name them as a substitute?

“GPGPU” isn’t ideal, as it’s a clumsy phrase referring to using a GPU for generalized computing, not the device itself. “HPCU” (High Performance Computing Unit) isn’t much better. But perhaps it doesn’t really matter.

After all, the term “CPU” is incredibly broad and encompasses a wide range of different processors and uses.

What’s next for the GPU to conquer?

With billions of dollars invested in GPU research and development by Nvidia, AMD, Apple, Intel, and dozens of other companies, today’s graphics processor isn’t going to be replaced by anything drastically different anytime soon.

For rendering, the latest APIs and the software packages that use them (such as game engines and CAD applications) are generally agnostic toward the hardware that runs the code, so in theory, they could be adapted to something entirely new.

However, there are relatively few components within a GPU dedicated solely to graphics. The triangle setup engine and ROPs are the most obvious ones, and ray tracing units in newer releases are highly specialized, too. The rest is essentially a massively parallel SIMD chip, supported by a robust and intricate memory/cache system.

The fundamental designs are about as good as they’re ever going to get, and any future improvements are simply tied to advances in semiconductor fabrication techniques. In other words, they can only improve by housing more logic units, running at a higher clock speed, or a combination of both.

Of course, new features can be added to let them function in a broader range of scenarios. This has happened several times throughout the GPU’s history, though the transition to a unified shader architecture was particularly significant. While it’s preferable to have dedicated hardware for handling tensors or ray tracing calculations, the core of a modern GPU is capable of managing it all, albeit at a slower pace.

This is why the likes of the AMD MI250 and Nvidia GH100 bear a strong resemblance to their desktop PC counterparts, and future designs intended for use in HPC and AI are likely to follow this trend. So if the chips themselves aren’t going to change significantly, what about their applications?

Given that anything related to AI is essentially a branch of computation, a GPU is likely to be used whenever there’s a need to perform a multitude of SIMD calculations. While there aren’t many sectors in science and engineering where such processors aren’t already being used, what we’re likely to see is a surge in the use of GPU derivatives.

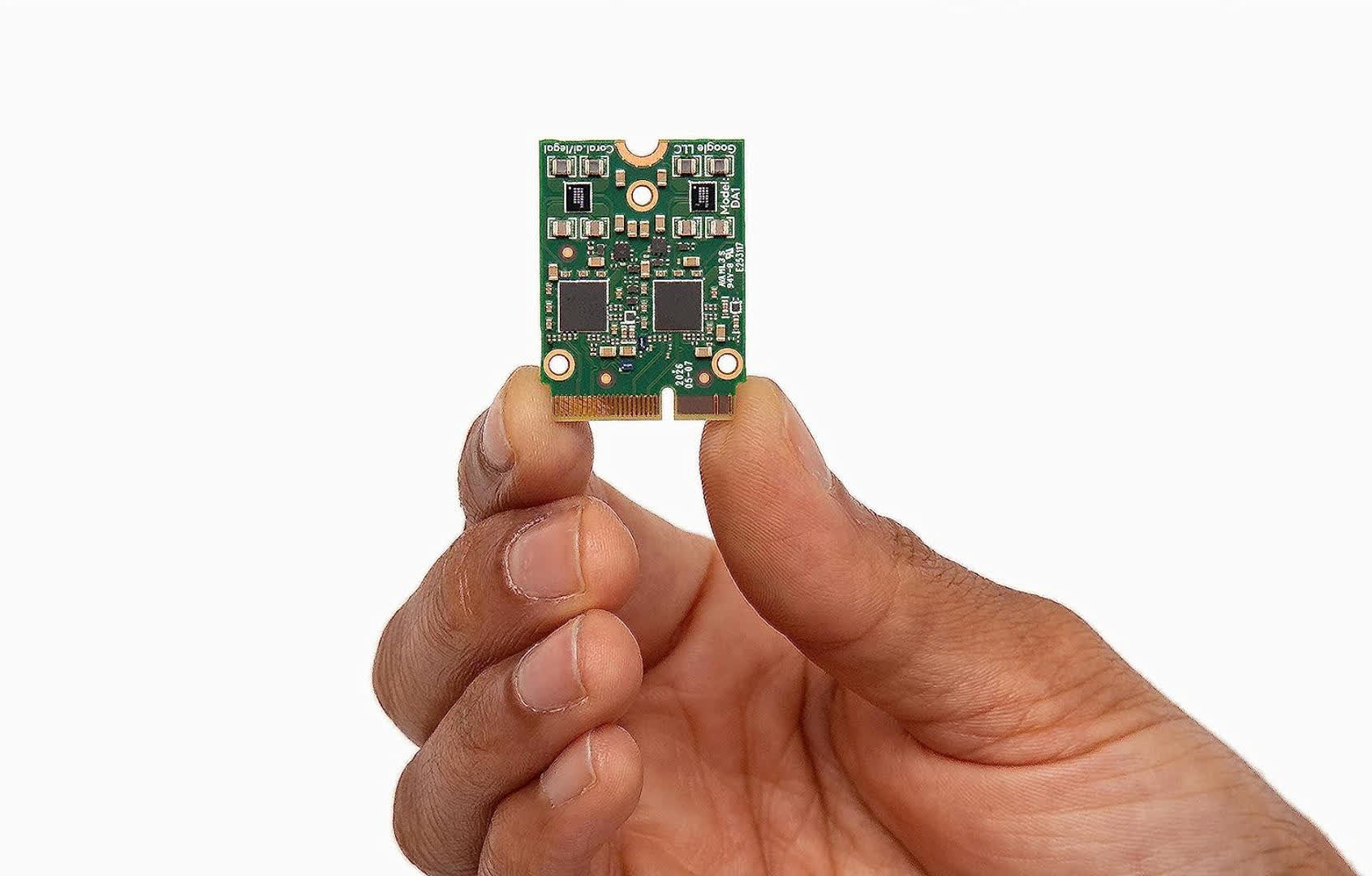

You can already buy phones equipped with miniature chips whose sole function is to accelerate tensor calculations. As tools like ChatGPT continue to grow in power and popularity, we’ll see more devices featuring such hardware.

The humble GPU has evolved from a device merely intended to run games faster than a CPU alone could, to a universal accelerator, powering workstations, servers, and supercomputers around the globe.

Millions of people worldwide use one every day. That includes not just our computers, phones, televisions, and streaming devices, but also services that incorporate voice and image recognition or provide music and video recommendations.

What’s truly next for the GPU may be uncharted territory, but one thing is certain: the graphics processing unit will continue to be the dominant tool for computation and AI for many decades to come.