The big picture: Generative AI products are usually designed to create still images or text snippets by interpreting users' instructions. The company formerly known as Facebook wants to extend this capability by bringing audio and music content into the equation.

Meta recently released AudioCraft, its framework for generating "high-quality," realistic audio and music, under an open-source license. The technology is designed to fill a gap in the generative AI market, where audio creation has historically lagged behind. While some progress has already been made in this area, the company acknowledges that existing solutions are highly complicated, not very open, and not easily accessible for experimentation.

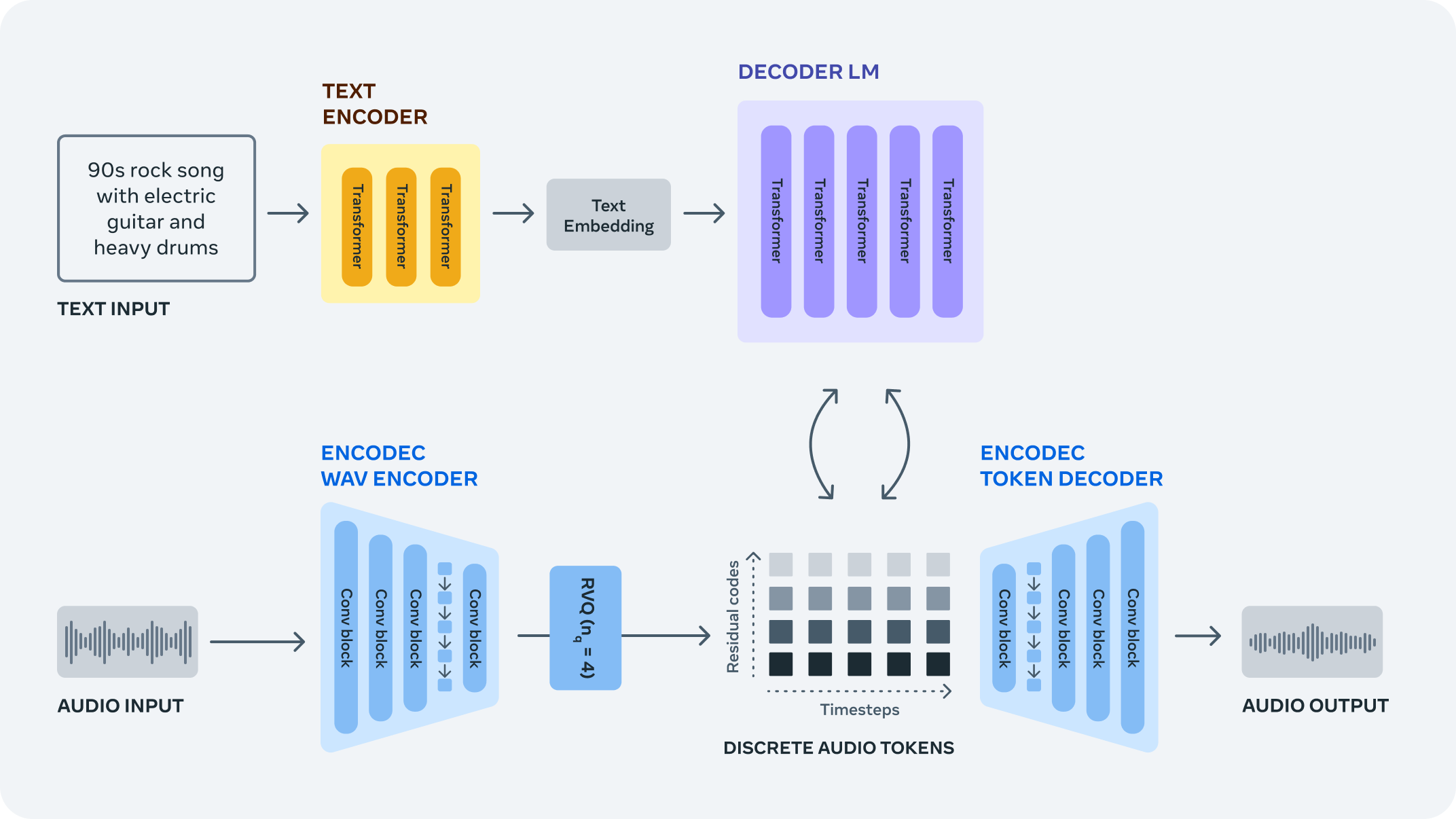

The AudioCraft framework is a PyTorch library for deep learning research on audio generation, comprising three main components: MusicGen, AudioGen, and EnCodec. According to Meta, MusicGen generates music from text-based user inputs, while AudioGen is designed to create sound effects. EnCodec, which was introduced in 2022, is a powerful encoding technology capable of "hypercompressing" audio streams.
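For a rough sense of what "hypercompressing" means, the Python sketch below does the back-of-the-envelope arithmetic. It assumes figures from the original EnCodec research paper rather than from this announcement: a 24 kHz model that emits 75 frames per second, codebooks with 1,024 entries (10 bits per code), and 8 codebooks in its 6 kbps mode, compared against uncompressed 16-bit mono PCM. The `bitrate_bps` helper is a hypothetical name used only for illustration.

```python
def bitrate_bps(frame_rate_hz: int, num_codebooks: int, codebook_size: int) -> int:
    """Bits per second of a token stream with `num_codebooks` codes per frame."""
    # Bits needed to index one codebook entry: 1,024 entries -> 10 bits.
    bits_per_code = (codebook_size - 1).bit_length()
    return frame_rate_hz * num_codebooks * bits_per_code

# Assumed EnCodec 24 kHz settings (taken from the EnCodec paper, not this article).
FRAME_RATE_HZ = 75
CODEBOOK_SIZE = 1024
NUM_CODEBOOKS = 8  # the 6 kbps setting

# Uncompressed baseline: 24 kHz, 16-bit, mono PCM.
SAMPLE_RATE_HZ = 24_000
BITS_PER_SAMPLE = 16

pcm_bps = SAMPLE_RATE_HZ * BITS_PER_SAMPLE  # 384,000 bits per second
codec_bps = bitrate_bps(FRAME_RATE_HZ, NUM_CODEBOOKS, CODEBOOK_SIZE)  # 6,000 bits per second
ratio = pcm_bps / codec_bps

print(f"PCM: {pcm_bps} bps, EnCodec tokens: {codec_bps} bps, ratio: {ratio:.0f}x")
# prints "PCM: 384000 bps, EnCodec tokens: 6000 bps, ratio: 64x"
```

Under those assumptions, the token stream is about 64 times smaller than raw PCM. That also hints at why a model like MusicGen works on compressed codec tokens rather than on raw waveforms.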

The MusicGen AI model can generate catchy tunes and songs from scratch. Meta has provided some examples generated from text prompts such as "Pop dance track with catchy melodies, tropical percussions, and upbeat rhythms, perfect for the beach," or "Earthy tones, environmentally conscious, ukulele-infused, harmonic, breezy, easygoing, organic instrumentation, gentle grooves."

AudioGen can be used to generate environmental background sound effects, such as a dog barking or a siren approaching and passing the listener. The open-source release of EnCodec is an improved version of the codec showcased in 2022, as it now allows for higher-quality music generation with fewer artifacts.

AudioCraft offers a simplified approach to audio generation, which has always been a challenge. Creating any kind of high-fidelity audio requires modeling complex signals and patterns at varying scales, the company explains. Music is the most challenging type of audio to generate, because it contains both local and long-range patterns. Previous models used symbolic representations like MIDI or piano rolls to generate content, Meta notes, but this approach falls short when trying to capture all the "expressive nuances and stylistic elements" found in music.
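The "varying scales" problem is easier to see with a bit of arithmetic. This Python sketch assumes the setup described in the MusicGen research paper, not in this announcement: 32 kHz audio that an EnCodec tokenizer summarizes at 50 frames per second using 4 parallel codebooks. The `sequence_lengths` function is a hypothetical helper for illustration only.

```python
# Assumed figures (from the MusicGen paper, not this article): 32 kHz audio,
# tokenized by EnCodec at 50 frames per second with 4 parallel codebooks.
SAMPLE_RATE_HZ = 32_000
FRAME_RATE_HZ = 50
NUM_CODEBOOKS = 4

def sequence_lengths(seconds: float) -> tuple[int, int, int]:
    """Return (raw samples, codec frames, total codec tokens) for a clip."""
    samples = int(seconds * SAMPLE_RATE_HZ)
    frames = int(seconds * FRAME_RATE_HZ)
    return samples, frames, frames * NUM_CODEBOOKS

samples, frames, tokens = sequence_lengths(30)
print(samples, frames, tokens)  # 960000 1500 6000
```

A single 4/4 bar at 120 bpm lasts two seconds, which works out to 64,000 raw samples but only 100 codec frames. Long-range structure such as a returning chorus becomes more manageable to model over the shorter sequence, while the codec is left to handle fine, sample-level detail.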

Meta states that MusicGen was trained on roughly 400,000 recordings along with text descriptions and metadata. The model absorbed 20,000 hours of music either owned directly by the company or licensed specifically for this purpose. Compared with OpenAI and other developers of generative models, Meta appears to be striving to avoid licensing controversies or potential legal issues related to unethical training practices.