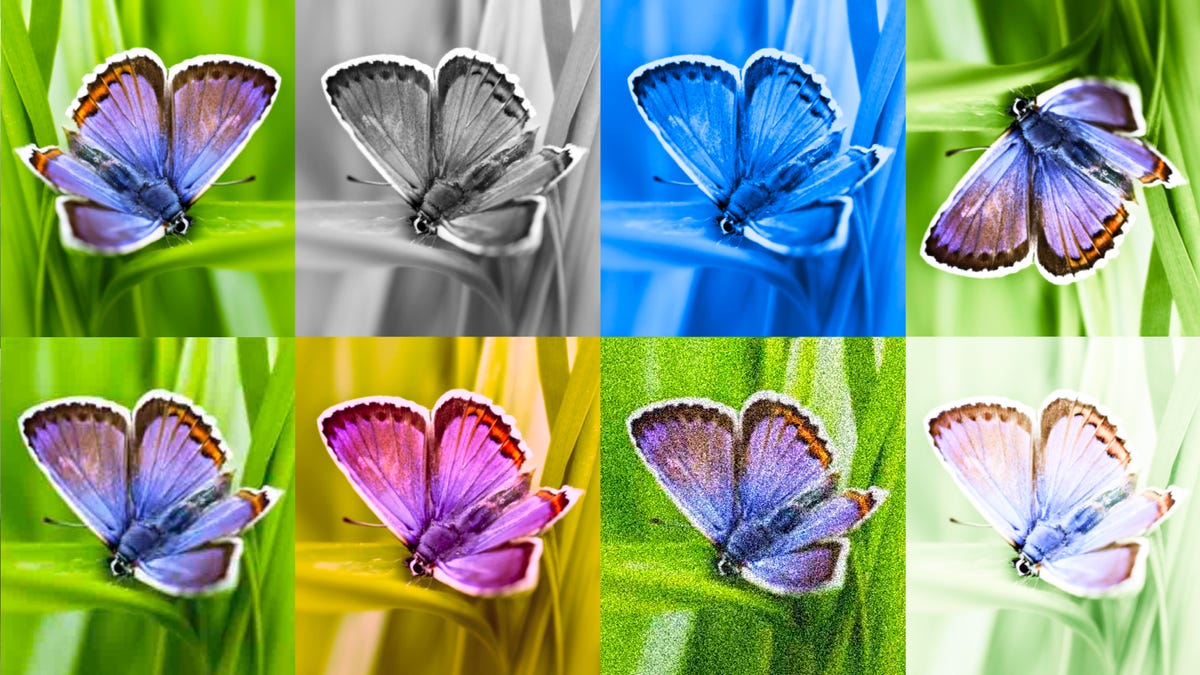

The tool can detect AI-generated images even after they have been enhanced, had their colours altered, or had filters applied. Image: Google DeepMind

Images generated by artificial intelligence tools are becoming harder to distinguish from those created by humans. AI-generated images can spread misinformation on a massive scale, which is one form of irresponsible AI use. To address this, Google unveiled SynthID, a new tool that can differentiate AI-generated images from human-created ones.

The tool, created by the DeepMind team, adds an imperceptible digital watermark to AI-generated images, much like a signature. The same tool can later detect this watermark to identify which images were created by AI, even after modifications such as adding filters, compression, and colour changes.


SynthID combines two deep learning models in one tool. One embeds the watermark into the original content in a way that is imperceptible to the naked eye, and the other identifies watermarked images.
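Google has not published SynthID's models in detail, so the snippet below is not DeepMind's method. It is a toy illustration of the general "embed a faint keyed signal, then detect it later" idea, using a classical spread-spectrum watermark rather than trained deep learning models. The image is modelled as a flat list of grayscale pixel values, and the names `embed`, `detect`, `key` and `strength` are hypothetical, chosen only for this sketch.

```python
import random
from typing import List

# Toy spread-spectrum watermark (hypothetical illustration, NOT SynthID's algorithm).

def make_pattern(key: int, n: int) -> List[int]:
    """Key-seeded pseudorandom +1/-1 pattern that acts as the secret watermark."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]

def embed(pixels: List[float], key: int, strength: float = 2.0) -> List[float]:
    """Add a faint +/-strength pattern to every pixel, clamped to the 0..255 range."""
    pattern = make_pattern(key, len(pixels))
    return [min(255.0, max(0.0, p + strength * s)) for p, s in zip(pixels, pattern)]

def score(pixels: List[float], key: int) -> float:
    """Correlate mean-centred pixels with the pattern: about `strength` if marked, about 0 if not."""
    pattern = make_pattern(key, len(pixels))
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) * s for p, s in zip(pixels, pattern)) / len(pixels)

def detect(pixels: List[float], key: int, strength: float = 2.0) -> bool:
    """Report a watermark when the correlation exceeds half the embedding strength."""
    return score(pixels, key) > strength / 2

if __name__ == "__main__":
    img_rng = random.Random(0)
    original = [img_rng.uniform(50, 200) for _ in range(256 * 256)]  # fake 256x256 image
    marked = embed(original, key=42)
    print(detect(marked, key=42), detect(original, key=42))
    print(detect([p + 20 for p in marked], key=42))  # brightness shift
```

The score is computed on mean-centred pixels, so a uniform brightness shift leaves it unchanged and a contrast change only scales it. This loosely mirrors the robustness described above. A simple additive pattern like this would not reliably survive cropping or resizing, though, because those edits break the pixel alignment the sketch relies on.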

Currently, SynthID cannot detect all AI-generated images, because it is limited to those created with Imagen, Google's text-to-image tool. Still, it signals a promising future for responsible AI, especially if other companies adopt SynthID in their generative AI tools.


The tool will gradually roll out to Vertex AI customers using Imagen and is available only on that platform. However, Google DeepMind hopes to make it available in other Google products and to third parties soon.